How to Deploy AWS CloudFormation Templates Using Azure DevOps Pipelines

It is common for a company to run more than one cloud. Say your company has both an AWS and an Azure environment, and your team already works day-to-day with Azure DevOps (ADO): the repositories, pipelines, and secrets are all managed there. Now, for whatever reason, you need to spin up an S3 bucket in AWS. You do not have to leave Azure DevOps to do it: AWS publishes the AWS Toolkit for Azure DevOps, an extension that adds AWS tasks to your pipelines.

In this blog, we will go through the steps to deploy a CloudFormation template using the AWS Toolkit in Azure DevOps. Along the way, we will introduce some best practices for a more robust and clean deployment. Let’s start!

Prerequisites

- An Azure DevOps environment.

- The AWS Toolkit installed.

- The agents we use need to have the AWS CLI installed.

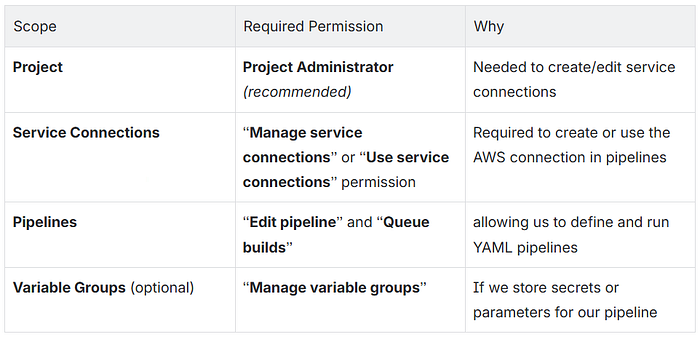

- An Azure DevOps account with these rights:


Minimum AWS IAM permissions

(In production, consider custom least-privilege policies.)

- AWSCloudFormationFullAccess

- AmazonS3FullAccess
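
As a rough idea of what a narrower policy could look like, here is a sketch written as a CloudFormation resource. The logical name PipelineDeployPolicy and the exact list of actions are my assumptions: they cover the change set workflow used in this blog and a handful of common bucket settings, but the actions you really need depend on your own template and on what the toolkit tasks call. Treat it as a starting point to test, not a verified minimum.

```yaml
# Illustrative only: a narrower managed policy for the role used by the pipeline.
# Assumption: the template creates nothing but S3 buckets.
AWSTemplateFormatVersion: '2010-09-09'
Resources:
  PipelineDeployPolicy:              # hypothetical logical name
    Type: AWS::IAM::ManagedPolicy
    Properties:
      Description: Change set workflow for S3 stacks (example only)
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Sid: ChangeSetWorkflow
            Effect: Allow
            Action:
              - cloudformation:CreateChangeSet
              - cloudformation:DescribeChangeSet
              - cloudformation:ExecuteChangeSet
              - cloudformation:DeleteChangeSet
              - cloudformation:DescribeStacks
              - cloudformation:DescribeStackEvents
              - cloudformation:GetTemplate
              - cloudformation:ValidateTemplate
            Resource: '*'            # narrow to specific stack ARNs where possible
          - Sid: ManageBuckets
            Effect: Allow
            Action:
              - s3:CreateBucket
              - s3:DeleteBucket
              - s3:PutBucketTagging
              - s3:PutEncryptionConfiguration
              - s3:PutBucketPublicAccessBlock
              - s3:PutBucketVersioning
            Resource: 'arn:aws:s3:::*'
```

If a deployment fails with an access-denied error, the stack events usually name the missing action, which can then be added to the list.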

Instructions

1. Creating a service connection

On the Azure DevOps overview page of the project, navigate to the “Project settings” in the bottom left corner:

Then navigate to “Service connections“:

Here we can create a “new service connection” in the top right corner. Select AWS and continue with the “Next” button:

For simplicity, we focus on three parameters: Access Key ID (optional), Secret Access Key (optional), and Session Token. All three are retrieved from the AWS access portal.

In the AWS access portal, select an account to see all the roles available for it. Choose the role with the minimum required permissions. Clicking “Access Key” displays a screen with the Access Key ID, Secret Access Key, and Session Token. Copy them into the new service connection.

Note: A session token is temporary and will expire. If you want to run this pipeline on a permanent basis, you will need permanent keys instead.

At the bottom of the service connection form is the “Service connection details” section. Give the connection a name and note it down, because we need to reference it later. Finally, press “Save” and the service connection will be created.

2. Creating the environment on Azure DevOps

Creating the environment is part of the deployment. To keep environments understandable, the name should reflect the target we are deploying to. For this blog we will just use “test”, but I suggest naming it after your actual target, which would be “AWS test” in this case. This helps to tell environments apart when you deploy to several targets.

On the Azure DevOps project screen, navigate to the Pipelines tab in the left nav bar.

Select the Environments tab under the Pipelines category.

In the top right corner press “New environment”.

It will show a new side bar where we will add the name and nothing else. We will not add any resources yet, because later we will link the deployment of the CloudFormation template with the environment.

The final step is to press “Create”.

3. Creating the configuration files for the pipeline

The main focus of this blog is the Azure DevOps pipeline, not the CloudFormation template, so we will not go into the details of the template itself. The only information relevant to the pipeline is where the template is stored and whether a parameter file is used (we will be using one). If you want to learn more about CloudFormation templates, see the official documentation.
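
For readers who want something concrete to deploy, here is a minimal sketch of what the two files could contain. The parameter name BucketName, the logical ID ExampleBucket, and the bucket name value are placeholders of mine, not taken from the original setup. The parameter file assumes the ParameterKey/ParameterValue list format that CloudFormation parameter files normally use, written as YAML.

```yaml
# File: s3/cf-template.yml (illustrative content)
AWSTemplateFormatVersion: '2010-09-09'
Description: Minimal S3 bucket used to try out the pipeline
Parameters:
  BucketName:
    Type: String
Resources:
  ExampleBucket:                     # hypothetical logical ID
    Type: AWS::S3::Bucket
    Properties:
      BucketName: !Ref BucketName
---
# File: s3/cf-params.yml (illustrative content)
- ParameterKey: BucketName
  ParameterValue: my-example-bucket-change-me   # bucket names must be globally unique
```

The two YAML documents are shown in one block only for brevity; in the repository they are separate files.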

In this blog we will be using a repository in the Azure DevOps project.

Below is the file structure that will be used in this blog:

    {repo-name}
    |--> s3
    |    |--> cf-params.yml
    |    |--> cf-template.yml
    |    |--> azure-pipeline.yml
    |--> pipeline-templates
         |--> cf-deploy-template.yml

Generic deployment template: cf-deploy-template.yml

The pipeline template will use one stage with multiple jobs, and each job can contain multiple tasks.

This structure makes it easy to reuse the same pipeline for different environments (e.g., dev, test, prod).

The pipeline jobs will be as follows: Create the changeset → print the changeset → wait for manual approval → either execute the changeset or delete it.

    parameters:
      - name: environment
        type: string

    stages:
      - stage: Deploy
        # environment is a template parameter, so it is read with ${{ }} rather than $( )
        displayName: "Deploy to ${{ parameters.environment }}"
        jobs:
          - job: CreateChangeSet
          - job: ShowChangeSet
          - job: WaitForApproval
          - job: DeployChangeSet

Parameters

At the top of the file we will define the parameters. These can be given a value in any pipeline stage that references this template. The parameters:


    parameters:
      - name: awsCredentials
        type: string
      - name: awsRegion
        type: string
      - name: environment
        type: string
      - name: stackIdentifier
        type: string
      - name: stage
        type: string
      - name: templateFilePath
        type: string
      - name: templateParametersFilePath
        type: string

CreateChangeSet

The first step is to create a CloudFormation change set using the CloudFormationCreateOrUpdateStack@1 task. This task can create or update a CloudFormation stack directly from the pipeline.

Before the task runs, we check out the repository so the pipeline can access the CloudFormation template and parameter files. The deployment has to go through a change set that is created but not yet executed, which is what useChangeSet: true and autoExecuteChangeSet: false configure. All other inputs reference the parameters of the template.

    stages:
      - stage: ${{ parameters.stage }}
        jobs:
          - job: CreateChangeSet
            displayName: 'Create CloudFormation Change Set'
            steps:
              - checkout: self
              - task: CloudFormationCreateOrUpdateStack@1
                displayName: 'Create CloudFormation Change Set'
                inputs:
                  useChangeSet: true
                  autoExecuteChangeSet: false
                  changeSetName: '${{ parameters.stackIdentifier }}-changeset'
                  awsCredentials: '${{ parameters.awsCredentials }}'
                  regionName: '${{ parameters.awsRegion }}'
                  stackName: '${{ parameters.stackIdentifier }}-stack'
                  templateFile: '${{ parameters.templateFilePath }}'
                  templateParametersFile: '${{ parameters.templateParametersFilePath }}'

ShowChangeSet

For the next job, the change set is printed so that the admin can review it. For this we use the AWSCLI@1 task. The task comes with the AWS Toolkit, but as mentioned in the prerequisites, the AWS CLI itself still has to be installed on the agents in the pool. This job can only run once the change set has been created, so dependsOn: CreateChangeSet is added.

For more information about the AWS CLI, see the official documentation.

          - job: ShowChangeSet
            dependsOn: CreateChangeSet
            displayName: 'Show the change set.'
            steps:
              - task: AWSCLI@1
                displayName: 'Show the change set'
                inputs:
                  awsCredentials: '${{ parameters.awsCredentials }}'
                  regionName: '${{ parameters.awsRegion }}'
                  awsCommand: 'cloudformation'
                  awsSubCommand: 'describe-change-set'
                  awsArguments: "--stack-name ${{ parameters.stackIdentifier }}-stack --change-set-name ${{ parameters.stackIdentifier }}-changeset --output table"

WaitForApproval

Like the previous job, the “wait for approval” job depends on the job before it. The pool is set to server because this task does not need to run on an agent. The job timeout is set to one day (1440 minutes). If a day passes without a decision from the admin, the job fails automatically, and the change set is then removed by the DeleteChangeSet job.

          - job: WaitForApproval
            dependsOn: ShowChangeSet
            displayName: 'Manual Approval'
            pool: server
            timeoutInMinutes: 1440
            steps:
              - task: ManualValidation@0
                inputs:
                  instructions: 'Please review the change set in AWS Console and approve.'

DeleteChangeSet & DeployChangeSet

Both jobs depend on the outcome of the WaitForApproval job. The key difference between them is the execution condition: DeleteChangeSet sets condition: failed(), while DeployChangeSet keeps the default condition and only runs when WaitForApproval succeeds. As a result, the DeleteChangeSet job only runs when the WaitForApproval job fails, which happens when the manual approval is rejected or times out.

          - job: DeleteChangeSet
            dependsOn: WaitForApproval
            condition: failed()
            displayName: 'Delete Change set after rejection.'
            steps:
              - task: AWSCLI@1
                displayName: 'Delete change set'
                inputs:
                  awsCredentials: '${{ parameters.awsCredentials }}'
                  regionName: '${{ parameters.awsRegion }}'
                  awsCommand: 'cloudformation'
                  awsSubCommand: 'delete-change-set'
                  awsArguments: "--stack-name ${{ parameters.stackIdentifier }}-stack --change-set-name ${{ parameters.stackIdentifier }}-changeset --output table"

The DeployChangeSet job introduces a new nested structure starting at strategy. This is required because it is a deployment job. Several strategies are available, but since we only need to deploy once, we use runOnce. The final key, deploy, defines the actual deployment steps.

          - deployment: DeployChangeSet
            displayName: 'Execute Change Set.'
            environment: '${{ parameters.environment }}'
            dependsOn: WaitForApproval
            strategy:
              runOnce:
                deploy:
                  steps:
                    - task: CloudFormationExecuteChangeSet@1
                      displayName: 'Execute CloudFormation Change Set'
                      inputs:
                        awsCredentials: '${{ parameters.awsCredentials }}'
                        regionName: '${{ parameters.awsRegion }}'
                        stackName: '${{ parameters.stackIdentifier }}-stack'
                        changeSetName: '${{ parameters.stackIdentifier }}-changeset'

Specific S3 bucket pipeline: azure-pipeline.yml

This is the main pipeline file that ties everything together. It passes the necessary parameters to the deployment template. The pipeline is triggered automatically whenever changes under the s3 folder are pushed to the main branch.

The variables section defines generic variables that can be used across different environments (stages). Finally, the cf-deploy-template.yml template is referenced and all required parameters are passed into it, including the AWS region and the service connection name.

    trigger:
      branches:
        include:
          - main
      paths:
        include:
          - s3/**

    pool:
      name: 'ReleaseAgentPool'

    variables:
      - name: templateFilePath
        value: 's3/cf-template.yml'
      - name: templateParametersFilePath
        value: 's3/cf-params.yml'
      - name: stackIdentifier
        value: 's3'

    stages:
      # Paths follow the file structure shown above
      - template: /pipeline-templates/cf-deploy-template.yml
        parameters:
          awsCredentials: 'logstash-tfs-dev'
          awsRegion: 'eu-north-1'
          environment: 'test'
          templateFilePath: '$(templateFilePath)'
          templateParametersFilePath: '$(templateParametersFilePath)'
          stackIdentifier: '$(stackIdentifier)'
          stage: 'test'

4. Creating the pipeline in Azure DevOps

On the Azure DevOps project screen, navigate to the Pipelines tab in the left nav bar.

Select the Pipelines tab under the Pipelines category.

The next steps depend on your setup. In this blog we use Azure Repos Git, but several other sources can be selected.

Select Azure Repos Git.

The next step asks us to select the repository, followed by a prompt asking where the configuration is stored. Point it at the azure-pipeline.yml file in the s3 folder, not at the pipeline template.


Finally, the pipeline will request access to the required resources. Once we have granted it, the pipeline will run.

5. Finishing up

Within the ShowChangeSet job, the similarly named “Show the change set” task lets the admin review the change set. By opening its log after the job has finished, the admin can see the change set and decide whether to deploy.
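
The full describe-change-set output can be long. If the log is hard to scan, one option is an extra step that prints only one row per resource change. The sketch below is my own addition, not part of the original pipeline: it uses the AWS CLI's --query option with a JMESPath expression, and the column names (Action, LogicalId, Type, Replacement) are labels I picked. Quoting may need adjusting depending on the agent's operating system.

```yaml
# Optional extra step for the ShowChangeSet job (sketch under the assumptions above).
- task: AWSCLI@1
  displayName: 'Summarize the change set'
  inputs:
    awsCredentials: '${{ parameters.awsCredentials }}'
    regionName: '${{ parameters.awsRegion }}'
    awsCommand: 'cloudformation'
    awsSubCommand: 'describe-change-set'
    # The folded scalar (>-) joins these lines into a single argument string.
    awsArguments: >-
      --stack-name ${{ parameters.stackIdentifier }}-stack
      --change-set-name ${{ parameters.stackIdentifier }}-changeset
      --query "Changes[].ResourceChange.{Action:Action,LogicalId:LogicalResourceId,Type:ResourceType,Replacement:Replacement}"
      --output table
```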

Once everything is correct and approved, you should see the satisfying sight of green check marks.

Not 100% idempotent!

A known limitation of this pipeline is that it never deletes a stack, only the change set. If for whatever reason you want to remove the entire stack, the admin will have to do it manually.
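
If manual deletion in the AWS console becomes a chore, a separate pipeline that is only ever started by hand is one possible alternative. The sketch below assumes the toolkit's CloudFormationDeleteStack@1 task is available in your installed version; the file name is hypothetical, and the connection name, region, and stack name are simply the values used earlier in this blog.

```yaml
# delete-stack-pipeline.yml (hypothetical file name): run manually to tear down the stack.
trigger: none                        # never start automatically

pool:
  name: 'ReleaseAgentPool'

steps:
  - task: CloudFormationDeleteStack@1
    displayName: 'Delete CloudFormation stack'
    inputs:
      awsCredentials: 'logstash-tfs-dev'   # service connection used in this blog
      regionName: 'eu-north-1'
      stackName: 's3-stack'                # '<stackIdentifier>-stack'
```

Keep in mind that CloudFormation cannot delete a bucket that still contains objects, so the bucket may need to be emptied first.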

Best practices summed up

Some of these were explicitly mentioned and others have been inserted in the examples. For convenience they will all be summed up once more.

- Use Service Connections Safely

  Create an AWS service connection in Azure DevOps with the minimum required permissions.

  Use session tokens carefully; for permanent pipelines, use permanent credentials.

- Environment Naming and Organization

  Name Azure DevOps environments clearly (e.g., AWS test, prod) to avoid confusion across multiple environments.

- Reusable Pipeline Templates

  Use a generic deployment template (cf-deploy-template.yml) with parameters for credentials, region, environment, stack name, and template paths to enable flexibility and reusability across multiple pipelines and stages.

- Change Set Workflow

  Always create a CloudFormation change set first.

  Print the change set for admin review before execution to prevent unintended infrastructure changes.

- Manual Approval

  Include a manual approval step before executing changes to ensure safe, deliberate deployment.

  Set a timeout with proper failure handling for unattended approvals.

- Separate Execution and Cleanup

  Use conditional jobs: delete change sets on failed approval but don’t automatically delete stacks, maintaining safety and control.

- Modular and Repeatable Structure

  Structure pipelines into stages and jobs for clarity and reusability.

  Keep deployments consistent and predictable.
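
To illustrate the reusable-template practice, the stages section of azure-pipeline.yml could reference the same template a second time for another environment. This is only a sketch: the prod service connection and the prod environment are hypothetical names that would have to exist in your project, and the template path assumes the repository layout shown earlier.

```yaml
# Sketch: two stages built from the same deployment template.
stages:
  - template: /pipeline-templates/cf-deploy-template.yml
    parameters:
      awsCredentials: 'logstash-tfs-dev'
      awsRegion: 'eu-north-1'
      environment: 'test'
      templateFilePath: '$(templateFilePath)'
      templateParametersFilePath: '$(templateParametersFilePath)'
      stackIdentifier: '$(stackIdentifier)'
      stage: 'test'

  - template: /pipeline-templates/cf-deploy-template.yml
    parameters:
      awsCredentials: 'aws-prod-connection'   # hypothetical service connection
      awsRegion: 'eu-north-1'
      environment: 'prod'                     # hypothetical Azure DevOps environment
      templateFilePath: '$(templateFilePath)'
      templateParametersFilePath: '$(templateParametersFilePath)'
      stackIdentifier: '$(stackIdentifier)'
      stage: 'prod'
```

Because stages run in order by default, the prod stage would only start after the test stage has succeeded, and each stage gets its own change set review and approval.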

Summary:

This summary was generated by AI to provide a concise overview of the blog content.

In multi-cloud environments, teams often need to manage resources across both AWS and Azure. For organizations already using Azure DevOps (ADO), the AWS Toolkit enables seamless deployment of AWS resources directly from pipelines.

This blog walks through deploying an AWS CloudFormation template via Azure DevOps. It covers setting up an AWS service connection, creating a deployment environment, and defining a reusable pipeline template that structures jobs into distinct steps — creating, reviewing, and approving change sets before execution. Manual approval ensures that all changes are reviewed, while automated tasks handle deployment safely and consistently.

The guide also emphasizes best practices: using least-privilege credentials, clear environment naming, reusable templates, and proper cleanup conditions. Although not fully idempotent — since stacks aren’t automatically deleted — this approach provides a robust, flexible, and auditable framework for deploying AWS infrastructure through Azure DevOps pipelines.
