The Evolution of AWS from a Cloud-Native Development Perspective: Data, Sustainability, Builder Experience, and Serverlessification

AWS doubles down on its initiatives announced at re:Invent 2021. Data, sustainability, and a lower barrier to integrating services and onboarding developers were the recurring themes of AWS re:Invent 2022. Now that five months have passed, it seems like a good moment to re:Cap and evaluate what has happened in the world of AWS since re:Invent ‘22.

In this blog post, we will walk you through the most important movements announced by AWS at re:Invent 2022 and discuss the impact on AWS cloud-native builders.

Lingering impressions

Like the ‘21 edition, the ‘22 edition of AWS’s annual conference didn’t feature any game-changing announcements, but it did bring quite a few welcome enhancements to existing services. Beyond that, AWS seems to be pivoting toward verticals: services that cover a specific industry. Verticals can be really beneficial to customers that don’t have the capital to develop their own solution or don’t want to be distracted by building services outside their core business. However, spinning up the innovation flywheel to find product-market fit is more important than ever, because with the announcement of AWS Supply Chain, AWS has shown that it can and will take over your commodity business. The AWS cloud is maturing at a pace that could make a lot of businesses irrelevant.

Nowadays we have many ways to store data in the cloud. The next step is to have tools and knowledge to make use of this data. AWS is focusing on this next step with their announcements at re:Invent 2022.

A lot of effort is being put into improving the builder experience. AWS wants you to be able to build as much as you can, as fast as possible, on their platform, whoever you might be. Whether you are a seasoned developer or have absolutely no experience, there is an entry point for you. Or at least, that is the goal.

Making more use of the term ‘serverless’ has also been on AWS’s agenda since 2021. We have seen new serverless versions of existing services arrive, even though not all of them can scale down to zero and some even have an entry cost. While it is always nice to have options, the other side of the story is that we need to have a discussion about what the term serverless means nowadays.

Another topic that AWS builds upon from the previous year is sustainability. Where in 2021 the major announcement was the addition of the sustainability pillar to the Well-Architected Framework, this year it is about concrete ways of having your workload consume less energy and also a promise to be water positive by 2030.

In the rest of this blog, we will dive deeper into these topics.

Building a future-proof data foundation

Besides announcing new services like Amazon DataZone and enhancements to existing services like AWS Glue Data Quality, AWS has come to realize that education is necessary to make sure all the data we have available is put to work.

Rather than letting data collect dust inside our data warehouses, AWS wants to build a future-proof data foundation by educating current and future generations and giving them the skills needed to leverage the information hidden in those vast mountains of data. It provides over 150 online courses, along with low-code and no-code tools, to help people become data literate.

A key characteristic of the data-related announcements is that the services don’t force you to re-architect your system or take on technical debt when future requirements change. One concrete example is easier integration between services to avoid moving data around unnecessarily, such as Amazon Redshift auto-copy from Amazon S3.

Ongoing serverlessification

Related to this is the ongoing serverlessification of AWS services. Originally, the term serverless was used only for services with a pay-as-you-go model. However, AWS seems to be moving away from this paradigm with the announcement of other ‘serverless’ services.

An example is Amazon OpenSearch Serverless. The serverless nature of this service is debatable, since you already face a hefty bill of $700 just for enabling it. Not really pay-as-you-go anymore, is it? Still, AWS seems to be serious about the serverlessification of its services. At the last re:Invent, several enhancements were announced that provide a higher level of abstraction to lower the barrier to entry (Amazon OpenSearch Serverless), make services more cost-efficient (efforts around a zero-ETL world), or remove the need for makeshift solutions to bend services to your business needs (Amazon EventBridge Pipes).

AWS is changing the meaning of serverless to ‘a service that entails a higher abstraction’, so that customers don’t have to be bothered with all kinds of technical stuff under the hood. The result is more focus on adding value for customers, a faster time to market, and less distraction caused by implementing and managing commodities.

A more efficient AWS builder

As we mentioned last year in our recap of re:Invent, AWS aims to improve business agility for their users by reducing complexity.

Looking at the announcements made during pre:Invent and re:Invent, it becomes clear that AWS is still trying to remove as much as possible of the undifferentiated heavy lifting done by builders on their platform. Every line of code can be considered a liability, and if AWS can take care of a piece of (glue) code for us, we would always opt for that. As a developer or engineer you just want to get business value out to the customer as soon as possible, and it clearly shows that AWS is listening to the developers using their platform. The announcements of Amazon EventBridge Pipes, payload-based message filtering in Amazon SNS, and more advanced filtering capabilities in Amazon EventBridge are examples of that, and they will reduce the amount of code developers have to write while working on the AWS platform. This in turn lets builders focus more on their actual business logic.
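To make the reduction in glue code a bit more concrete, here is a minimal Python sketch. It is not how Amazon SNS or Amazon EventBridge implement filtering; it only mimics exact-value matching on a nested payload, to show the kind of hand-written check that a declarative filter policy can replace. The policy contents and the `matches` helper are hypothetical.

```python
# Hypothetical filter policy: only deliver "order_placed" events for one region.
# The shape (keys mapping to lists of allowed values, nested keys for nested
# payload fields) follows the general style of a JSON filter policy.
FILTER_POLICY = {
    "eventType": ["order_placed"],
    "store": {"region": ["eu-west-1"]},
}


def matches(policy, payload):
    """Simplified stand-in for a managed filter.

    Every key in the policy must exist in the payload. A list in the policy
    means "the payload value must equal one of these"; a dict means "apply
    the same rule to the nested object".
    """
    for key, expected in policy.items():
        if key not in payload:
            return False
        actual = payload[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    message = {"eventType": "order_placed", "store": {"region": "eu-west-1"}}
    print(matches(FILTER_POLICY, message))
```

With payload-based filtering, a policy like this is attached to the subscription itself (with the `FilterPolicyScope` subscription attribute set to `MessageBody`), so non-matching messages never reach your code and a helper like `matches` does not need to exist at all.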

Another great example of this is a recent improvement in AWS Lambda. In our experience, a lot of enterprises have standardized on Java and/or .NET, and as more and more companies migrate their workloads to the cloud, we see Java developers trying to adopt new compute models as well. We sometimes see Java and .NET developers skip services like AWS Lambda and go straight to running their applications in containers, because Java-based applications suffer from slow start times, a.k.a. ‘cold start’ issues. This is mainly because the JVM has to start up, load classes, and warm up its just-in-time compiler, and because popular frameworks like Spring do a lot of initialization work. As a result, Java applications need more memory and can take seconds before they are able to serve traffic, compared to applications written in dynamic, interpreted languages like Python or JavaScript.

Within the Java ecosystem, ‘new’ frameworks like Quarkus and Micronaut aim to solve some of these issues and to be more lightweight. These frameworks integrate tightly with innovations like GraalVM, which can compile applications ahead of time to native code, resulting in faster startup times and a much lower memory footprint for Java-based applications.

However, learning new frameworks and tools like Quarkus, Micronaut, or GraalVM takes time, and that is time not spent on delivering business value. To help builders spend more time building on the platform instead of for the platform, AWS introduced AWS Lambda SnapStart. With Lambda SnapStart you can get up to a 10x improvement in your Java Lambda cold start times. SnapStart takes a snapshot of the execution environment, including the state of the JVM, after it has launched and initialized, and resumes new execution environments from that snapshot instead of initializing them from scratch. Lambda SnapStart is a clear example of what can be done if you own the entire stack. It makes use of the snapshot functionality of the underlying Firecracker microVM and a checkpoint-and-restore feature of the JVM.
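As an illustration of how little is needed to opt in, here is a minimal sketch using boto3, the AWS SDK for Python. It assumes an existing Java function and an already configured Lambda client; the helper names and the function name in the usage note are hypothetical. SnapStart only applies to published versions, which is why the sketch publishes a new version after changing the configuration.

```python
def snapstart_settings(function_name):
    """Build the configuration update that turns SnapStart on."""
    return {
        "FunctionName": function_name,
        # SnapStart snapshots are taken when a version is published.
        "SnapStart": {"ApplyOn": "PublishedVersions"},
    }


def enable_snapstart(lambda_client, function_name):
    """Enable SnapStart and publish a version so a snapshot gets created.

    `lambda_client` is expected to behave like boto3.client("lambda").
    Returns the version identifier reported by publish_version.
    """
    lambda_client.update_function_configuration(**snapstart_settings(function_name))
    # Wait until the configuration update has been applied before publishing.
    lambda_client.get_waiter("function_updated").wait(FunctionName=function_name)
    response = lambda_client.publish_version(FunctionName=function_name)
    return response["Version"]
```

Assuming boto3 is installed and credentials are configured, a call could look like `enable_snapstart(boto3.client("lambda"), "my-java-function")`. Passing the client in as an argument keeps the helper easy to test without touching a real AWS account.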

Another change AWS seems to be making is taking a suite approach to supporting builders during the entire software development lifecycle (SDLC), from feature request to deployment. Up until now, Amazon offered a lot of separate tools (CodeCommit, CodeBuild, CodePipeline, etc.) that could be combined to support developers, but some important parts were missing and teams had to find those outside the AWS environment. We see that bigger enterprises have adopted suite-based solutions like (Microsoft) GitHub + GitHub Actions and Azure DevOps. With the announcement of Amazon CodeCatalyst, that might be about to change for organizations working extensively with AWS. Amazon CodeCatalyst is offered as a standalone product, outside of the well-known AWS Console. It allows you to integrate with or connect to familiar products like Jira and GitHub, and the syntax for pipelines is based on the GitHub Actions workflow model. This is an interesting move and we hope to see the product evolve over the next couple of years.

One Cloud for All

When you walk around re:Invent, or even watch some of the sessions online, you will notice the word “builder” come up a lot. Not engineers, not developers, but builders. Now that the foundation of the AWS platform has matured, one focus of AWS has been to lower the entry barrier for building solutions on AWS and to widen the target audience beyond the technically inclined.

We have seen this previously with tools like AWS CDK and AWS Amplify, where the complexity of AWS CloudFormation has been abstracted away behind a more user-friendly and user-specific layer. The user-specific part is most visible in AWS Amplify, which caters specifically to frontend engineers who want to build AWS solutions, allowing them to quickly spin up an AWS backend and easily hook it up to their frontend framework of choice without having to know too much about what is going on under the hood.

However, these tools seem to be only the first stop on the road AWS is taking. The steps AWS is making indicate a goal of getting everyone and their parents to be able to build AWS solutions. This strategy makes sense from a competitive point of view. Making AWS a household name where the creatives of the world can easily bring their ideas to life will open up a new revenue stream that was previously blocked by the technology barrier.

Once building AWS solutions becomes “easy” enough, it will allow a new wave of disruptors to reach the market with a development speed and cost-effectiveness never seen before. And if they are going to change the world anyway, why not have it all run on the AWS infrastructure? Going this route will be a win-win for AWS and the innovators of tomorrow.

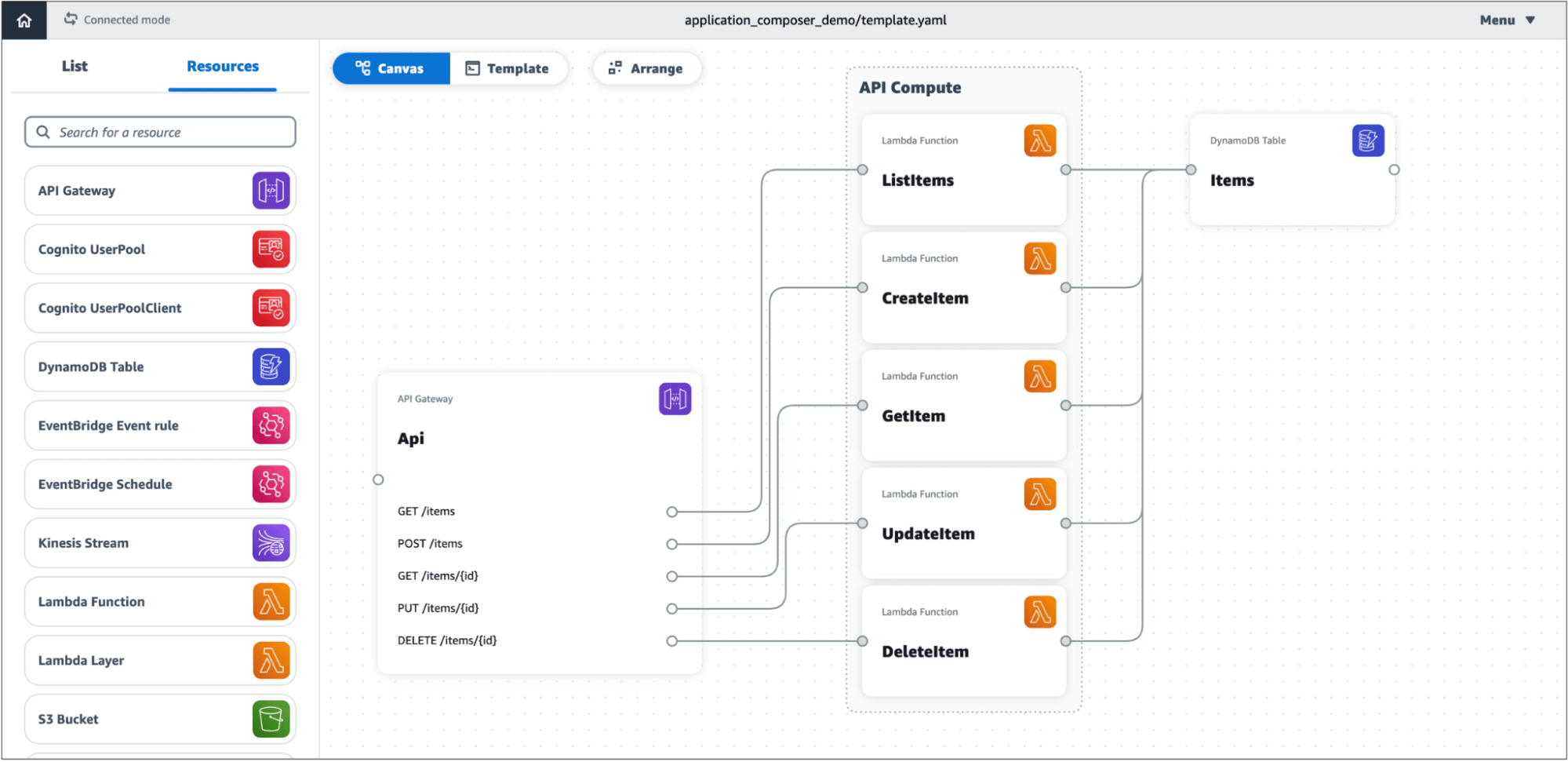

One of the new announcements this year at AWS re:Invent is the launch of AWS Application Composer, which allows you to visually design serverless applications, including APIs, background batch processing, and event-driven workloads. You can focus on what you want to build and let Application Composer handle the how. This launch moves AWS closer to its world domination goals, because compared to CDK you don’t even need to code anymore. It still requires knowledge of the building blocks to create a working and useful solution, though. Nevertheless, this is a step in the right direction.

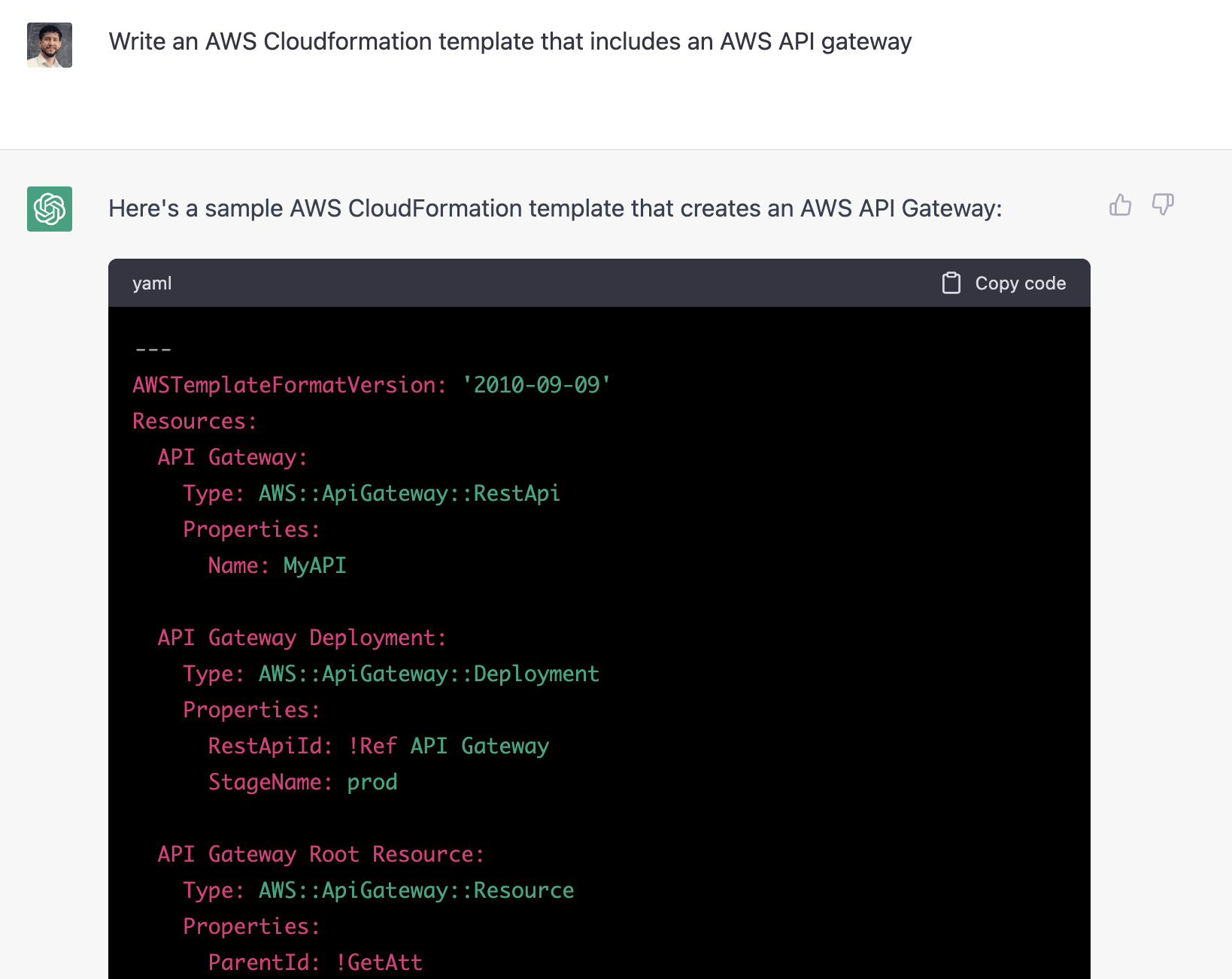

Another development in this area is the announcement of Amazon CodeWhisperer, which allows users to generate code by simply writing something similar to “generate a function that uploads a file to S3 using server-side encryption”. No doubt an answer to GitHub (Microsoft) Copilot, it brings AI into the mix to assist the AWS builder in creating their solutions.
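To give a feel for what such a prompt asks for, here is a hand-written Python sketch of a function that would satisfy it. This is not actual output from the tool; it is our own illustration using boto3, and the function name and its arguments are hypothetical.

```python
def upload_encrypted(s3_client, path, bucket, key):
    """Upload a local file to S3 and request server-side encryption.

    `s3_client` is expected to behave like boto3.client("s3"). The
    "AES256" value asks S3 to encrypt the object with S3-managed keys.
    Returns the S3 URI of the uploaded object.
    """
    s3_client.upload_file(
        path,
        bucket,
        key,
        ExtraArgs={"ServerSideEncryption": "AES256"},
    )
    return f"s3://{bucket}/{key}"
```

Assuming boto3 is installed and credentials are configured, a call could look like `upload_encrypted(boto3.client("s3"), "report.csv", "my-bucket", "reports/report.csv")`. Even for a snippet this small, you still need to know that S3, buckets, keys, and encryption options exist in order to ask for it and to judge the result.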

While CodeWhisperer requires even more low-level knowledge than Application Composer to get your solution running, the interesting property of CodeWhisperer is the use of natural language as the human interface. We have seen what the combination of natural language and AI can do with the rise of ChatGPT. If this is the way people are going to “do things” moving forward, it would be in the best interest of AWS to jump on the bandwagon and provide a ChatGPT-like interface to build AWS solutions. We have no doubt this is already being looked at.

All cloud for one

AWS is not only targeting “everyone”, they are also targeting “you specifically”. Over the years, AWS has released high-level services that cater to very specific, vertical use cases. One example is Amazon Connect, which was featured in one of the customer highlights during a keynote this year. Amazon Connect is an AWS service specifically designed to improve customer service by providing a cloud contact center and using AI to improve agent efficiency.

A new service of this type announced this year is AWS Supply Chain, an AWS-managed service that, as the name implies, helps you manage your supply chain by leveraging AWS services and machine learning to provide visibility and help you make decisions. Amazon as an e-commerce company has many years of experience in this domain, so it stands to reason that they have packaged many relevant best practices into this service. AWS is not only providing a service here; it is also sharing its knowledge through that service.

Continuing this trend of offering functionality derived from their own experience, AWS has released AWS Ambit Scenario Designer, an open-source set of tools to create 3D content. Amazon has been operating its own game studio for roughly the last 10 years. With the release of this toolset, AWS again targets a completely new set of builders.

What these developments aim to do is invite new builders to AWS who are trying to achieve very specific goals. AWS will offer them a user-friendly, end-to-end solution to do this, backed by the power of the AWS cloud. These managed services make it possible for users with minimal AWS knowledge to get things up and running for their vertical use cases. However, in our experience, as soon as you want to go beyond what the managed service has to offer, or if you run into an error, a more advanced level of knowledge becomes necessary, and the gap between those two levels of knowledge is unexpectedly large. This is where the AWS expert builders come into play and why having a deeper level of AWS knowledge will be beneficial.

We expect AWS to continue this trend and announce even more of these vertical services related to their own expertise. One of their key areas of focus is sustainability. They have pledged to become “water-positive” by 2030. It wouldn’t surprise us to see a service appear down the line that allows you to receive actionable insights about your water and energy usage for your facilities, thus helping other companies also become more sustainable.

Being more sustainable

A topic that is hot and happening: Sustainability and future-proof computing. Something we cannot ignore, given the ongoing change of our climate and all the coverage it gets in the media.

This is not a new topic for AWS: last year they released the Customer Carbon Footprint Tool and introduced the sustainability pillar, to name a few actions.

One of the causes of this climate change is our hunger for energy and our seeming lack of efficiency in producing and consuming that energy. And let’s be fair: our electronics have become more and more efficient over the last decades, but our demand has also grown exponentially. The computing power of my iPhone has increased significantly compared to one from 2013, yet my battery doesn’t last any longer. While efforts to increase power efficiency continue, we don’t scale back the demands of our workloads.

This re:Invent, AWS announced several improvements in performance and efficiency: smaller hardware or compute resources performing the same workload, which results in less energy consumption.

AWS’s motivation for becoming more sustainable is unclear, however: it could be driven by laws, or by the expectation that sustainability laws will be introduced in the near future, or it could just be marketing (greenwashing). Regardless of the reason, AWS seems to be serious about this, judging by the number of announcements that relate to sustainability in some way.

Managed services increase the sustainability of your cloud workloads

AWS is heavily advising customers to use managed services over EC2 instances. Besides the fact that managed services increase business agility and make experimentation easier and cheaper, they also contribute to more sustainable workloads: AWS has a strong incentive to increase the efficiency of its services so that it can handle more workloads on equal or less hardware. So, designing and building your workloads in AWS using managed services automatically contributes to a more sustainable architecture. This is nicely pointed out in the shared responsibility model of the sustainability pillar in the Well-Architected Framework.

Zero ETL

In his keynote, Adam Selipsky promised a ‘zero ETL’ future. ETL stands for ‘Extract, Transform, and Load’ and is a data pipeline pattern that is applied to almost all data warehouse ingestion flows. This may seem a somewhat technical announcement, but don’t be mistaken: data pipelines tend to require a lot of compute, let alone the labor to design and implement them, to get the data into shape before loading it into the destination data warehouse. So, a zero ETL future would be great. This re:Invent, a new integration was announced that should be the first step toward a zero ETL future: a managed way to ingest data from Aurora into Redshift, without the need to set up and maintain a separate data pipeline.

When you listen carefully to the keynotes of this re:Invent, you will notice that a lot of effort has gone into making people believe that AWS is boarding the sustainability train, full throttle. It seems that everything they develop has something to do with improved efficiency, doing more with equal or fewer resources, and higher-level abstractions in the form of managed services.

As far as we are concerned this is a strategy AWS may double down on since it will be a win-win outcome.

Take advantage of the future

Staying relevant is becoming more and more important for businesses now that the cloud is maturing and cloud vendors like AWS are starting to pivot toward verticals. Before you know it, AWS has taken over your business because it has turned your core business into a commodity. Given the economic size of the cloud platform, there is no longer any case for claiming that the cloud is a hype that will pass: it looks like AWS will surpass the $100 billion revenue mark sooner rather than later, possibly as early as this year. So, get on that train if you haven’t done so already, and learn to leverage everything the cloud has to offer to speed up your business innovation, stay relevant, and bring value to your customers! And if you want a hand with that, we’d love to help!

Want to know more about what we do?

We are happy to think along with you. Send us a message.