Automate customer interaction using OpenAI Assistants

Almost everybody knows what ChatGPT is. At the workshops I give, about 90% of the attendees have used ChatGPT. Most of them know about the company behind it, but only some know about Assistants. That is a pity, because Assistants can give you or your users a better experience. After reading this blog post, you will understand what OpenAI Assistants are, how they work, and what they can do for you and your users.

DALL·E-generated coffee bar, inspired by the famous Starbucks

The use case for the demo is a coffee-ordering application. Using the chat application, you talk to the barista, ask for suggestions, and order a nice cup of coffee, or something else if you do not like coffee. The demo shows how to work with the different aspects of OpenAI Assistants, including functions and retrievers. It also shows how to combine them with the hybrid search of Weaviate to find recommended products and to verify whether the product you want is available in the shop.

Understanding OpenAI Assistants

An assistant helps your users interact with a set of tools using natural language. It is configured with instructions and has access to an LLM and a set of tools. OpenAI provides some of these tools; others are functions that you provide yourself. This might sound abstract, so let's have a look at an example. One of the provided tools is the code interpreter, which the assistant uses to execute generated Python code. This overcomes a well-known weakness of LLMs: they are unreliable when they have to do calculations themselves. A math tutor assistant could be configured like this:

- instructions: You are a personal math tutor. Write and run code to answer math questions.

- tools: code_interpreter

- model: gpt-4-turbo-preview

That is enough to initialise an assistant. Users talk to the assistant through a Thread. Think of a Thread as the chat window: you and the assistant both add messages to it. After adding a message to the Thread, you start a run, which is like pushing a run button, to let the assistant process the conversation and respond.
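
The steps above can be sketched in a few calls. This is a minimal sketch, not the code from the demo: it assumes `client` is an `openai.OpenAI()` instance from the official Python SDK, and the function name `start_math_conversation` and the assistant name "Math Tutor" are made up for illustration.

```python
from typing import Any


def start_math_conversation(client: Any, question: str) -> tuple[str, str]:
    """Create the math tutor assistant, a thread, one user message and a run.

    Assumption: `client` is an `openai.OpenAI()` instance.
    Returns the thread id and the run id.
    """
    assistant = client.beta.assistants.create(
        name="Math Tutor",  # illustrative name
        instructions=("You are a personal math tutor. Write and run code "
                      "to answer math questions."),
        tools=[{"type": "code_interpreter"}],
        model="gpt-4-turbo-preview",
    )
    # The thread is the "chat window" that holds the conversation.
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question)
    # Starting a run asks the assistant to process the thread.
    run = client.beta.threads.runs.create(
        thread_id=thread.id, assistant_id=assistant.id)
    return thread.id, run.id
```

The run is processed asynchronously, so the caller still has to check its status before reading the answer from the thread.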

The following section introduces the assistant we create in this blog post.

The coffee-ordering assistant

I like, or rather need, a nice cup of coffee multiple times a day. I am a black coffee fan, but these hip coffee bars offer so many choices that some people find it hard to pick the right coffee. Therefore, we create a coffee assistant that helps you make a choice and assists you during the ordering process.

Have yourself a nice cup of coffee.

First, we give the assistant our instructions.

You are a barista in a coffee shop. You help users choose the products the shop has to offer. You have tools available to help you with this task. There are tools to find available products, add products, give suggestions based on ingredients, and finalise the order. You are also allowed to do small talk with the visitors.

We provide the assistant with the following tools:

- find_available_products: Finds available products based on the given input. The result is an array with valid product names or an empty array if no products are found.

- start_order: Starts an order. The result is ERROR or OK. You can use this to notify the user.

- add_product_to_order: Adds a product to the order. The result is ERROR or OK. You can use this to notify the user.

- remove_product_from_order: Removes a product from the order. The result is ERROR or OK. You can use this to notify the user.

- checkout_order: Checks out the order. The result is ERROR or OK. You can use this to notify the user.

- suggest_product: Suggests a product based on the input. The result is the name of the product that best matches the input.

The description of each tool or function is essential: the assistant uses it to determine which tool to use and when.
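
To make the ERROR or OK contract of the order tools concrete, here is a minimal in-memory sketch. The `Order` class and its rules (for example, that checking out an empty order is an error) are assumptions for illustration; the shop logic of the demo is not shown in this post.

```python
class Order:
    """Hypothetical in-memory order that reports OK or ERROR as plain strings."""

    def __init__(self, available_products):
        self.available = set(available_products)
        self.items = None  # None means that no order has been started

    def start_order(self) -> str:
        if self.items is not None:
            return "ERROR"  # an order is already in progress
        self.items = []
        return "OK"

    def add_product_to_order(self, product: str) -> str:
        if self.items is None or product not in self.available:
            return "ERROR"
        self.items.append(product)
        return "OK"

    def remove_product_from_order(self, product: str) -> str:
        if self.items is None or product not in self.items:
            return "ERROR"
        self.items.remove(product)
        return "OK"

    def checkout_order(self) -> str:
        if not self.items:  # no order started, or nothing in it
            return "ERROR"
        self.items = None
        return "OK"
```

Returning short, predictable strings keeps the tool output easy for the LLM to interpret and to turn into a friendly message for the user.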

The video below gives you an impression of what we will build.

The code

The first component of an OpenAI assistant is the Assistant class. I am not going to rewrite the complete OpenAI documentation here, but I do point out the essential parts. The assistant is the component that interacts with the LLM, and it knows the available tools.

The assistant can be loaded from OpenAI. OpenAI offers no get or load function that accepts a name, so we use a method that loops over the available assistants until it finds the one with the provided name. When creating or loading an assistant, you have to provide the tools_module_name, which is used to locate the tools that the assistant can use. It is essential to keep the tool definitions at that exact location so we can call them automatically. More on this feature when we discuss runs.
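
The lookup by name can be sketched as follows. This is not the wrapper's actual implementation: `find_assistant_by_name` is a hypothetical helper, and `client` is assumed to be an `openai.OpenAI()` instance whose `assistants.list()` result pages through all assistants when you iterate over it.

```python
from typing import Any, Optional


def find_assistant_by_name(client: Any, name: str) -> Optional[Any]:
    """Return the first assistant whose name matches, or None.

    Assumption: `client` is an `openai.OpenAI()` instance and iterating
    over the result of `assistants.list()` walks through every page.
    """
    for assistant in client.beta.assistants.list():
        if assistant.name == name:
            return assistant
    return None
```

Names are not unique on the OpenAI side, so this sketch simply returns the first match.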

We create the coffee assistant using the code below:

    def create_assistant():
        name = "Coffee Assistant"
        # Adjacent string literals are concatenated as-is, so every part
        # ends with a space to keep the words apart.
        instructions = ("You are a barista in a coffee shop. You "
                        "help users choose the products the shop "
                        "has to offer. You have tools available "
                        "to help you with this task. You can "
                        "answer questions of visitors, you should "
                        "answer with short answers. You can ask "
                        "questions to the visitor if you need more "
                        "information. more ...")

        return Assistant.create_assistant(
            client=client,
            name=name,
            instructions=instructions,
            tools_module_name="openai_assistant.coffee.tools")

Notice that we created our own Assistant class, not to be confused with the OpenAI Assistant class. It is a wrapper around the interactions with the OpenAI Assistant class. Below is the method that stores the function tools in the assistant.

    def add_tools_to_assistant(assistant: Assistant):
        assistant.register_functions(
            [
                def_find_available_products,
                def_start_order,
                def_add_product_to_order,
                def_checkout_order,
                def_remove_product_from_order,
                def_suggest_coffee_based_on_description
            ])
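
The body of register_functions is not shown here. A rough sketch of what such a method might do, assuming `client` is an `openai.OpenAI()` instance: wrap each function definition in the `tools` format of the API and update the assistant. Both helper functions below are hypothetical stand-ins, not the wrapper's real code.

```python
from typing import Any


def to_function_tools(definitions: list[dict]) -> list[dict]:
    """Wrap plain function definitions in the `tools` format of the API."""
    return [{"type": "function", "function": definition}
            for definition in definitions]


def register_functions(client: Any, assistant_id: str,
                       definitions: list[dict]) -> Any:
    """Hypothetical stand-in for the wrapper's register_functions method."""
    return client.beta.assistants.update(
        assistant_id=assistant_id, tools=to_function_tools(definitions))
```

Note that updating the `tools` of an assistant replaces the complete list, so all function definitions are sent together.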

We have to create the assistant only once. After that, we load the existing assistant to use it for interactions. The next code block shows how to load the assistant.

    try:
        assistant = Assistant.load_assistant_by_name(
            client=client,
            name="Coffee Assistant",
            tools_module_name="openai_assistant.coffee.tools")
        logging.info(f"Tools: {assistant.available_tools}")
    except AssistantNotFoundError as exp:
        logging.error(f"Assistant not found: {exp}")
        raise exp

Look at the complete code for the Assistant class at this location.

Threads

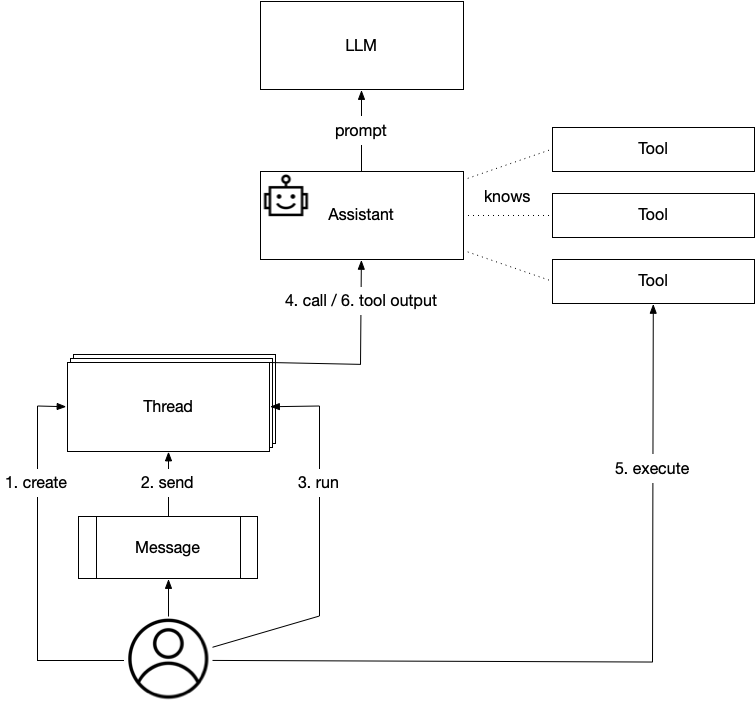

The thread is an interaction between a user and the assistant. Therefore, a Thread object is unique per user. In the application, we use the Streamlit session to store the thread_id, so each new session means a new Thread. The thread is responsible for accepting messages and sending them to the assistant. After a message is sent, a response message is awaited. Each interaction with an assistant is done using a run. The image above presents the flow of the application using these different components.
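
Keeping one Thread per session can be sketched like this. The helper `get_or_create_thread_id` is hypothetical; it assumes `client` is an `openai.OpenAI()` instance and that the session object behaves like a dictionary, which is how Streamlit's `st.session_state` can be used.

```python
from typing import Any, MutableMapping


def get_or_create_thread_id(session_state: MutableMapping[str, Any],
                            client: Any) -> str:
    """Return the thread id stored in the session, creating a thread once.

    Assumption: in a Streamlit app you would pass `st.session_state`.
    """
    if "thread_id" not in session_state:
        # First message of this session: create a new OpenAI thread.
        session_state["thread_id"] = client.beta.threads.create().id
    return session_state["thread_id"]
```

Because Streamlit reruns the script on every interaction, storing only the id and looking it up again is enough to continue the same conversation.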

It is essential to understand that our Assistant wraps the OpenAI Assistant. Calling the tools is done by our Assistant. The status of the run tells us whether the output contains an answer or a request to call a tool. If the status is requires_action, our Assistant finds the tool_calls and calls the tools. This is what happens in the following code block, taken from thread.py.

    def __handle_run(self, run: Run) -> Run:
        run = self.__verify_run(run_id=run.id)

        # Keep calling tools for as long as the assistant asks for them.
        while run.status == "requires_action":
            logger_thread.debug(f"Run {run.id} requires action")
            tools_calls = run.required_action.submit_tool_outputs.tool_calls

            tool_outputs = []
            for tool_call in tools_calls:
                result = self.assistant.call_tool(
                    tool_call.function.name,
                    json.loads(tool_call.function.arguments))
                logger_thread.debug(f"Result of call: {result}")
                tool_outputs.append({
                    "tool_call_id": tool_call.id,
                    "output": result
                })

            # Hand the results back and wait for the run to continue.
            run = self.client.beta.threads.runs.submit_tool_outputs(
                run_id=run.id,
                thread_id=self.thread_id,
                tool_outputs=tool_outputs
            )
            run = self.__verify_run(run_id=run.id)

        logger_thread.info(f"Handle run {run.id} completed.")
        return run

    def __verify_run(self, run_id: str):
        """
        Verify the status of the run, if it is still in
        progress, wait for a second and try again.
        :param run_id: identifier of the run
        :return: the run
        """
        run = self.client.beta.threads.runs.retrieve(
            run_id=run_id, thread_id=self.thread_id)
        logger_thread.debug(f"Run: {run.id}, status: {run.status}")

        if run.status not in ["in_progress", "queued"]:
            return run

        time.sleep(1)
        return self.__verify_run(run_id=run.id)

Notice how we use the __verify_run function to check the status of the run. If the run is queued or in_progress, we wait for it to finish.
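
The recursive check works fine for short runs. As an alternative, the same polling can be written as a loop with a timeout, so a run that never finishes cannot block forever or exhaust the recursion limit. This is a sketch, not the code from the repository: `wait_for_run`, `poll_interval` and `timeout` are hypothetical names, and `client` is assumed to be an `openai.OpenAI()` instance.

```python
import time
from typing import Any


def wait_for_run(client: Any, thread_id: str, run_id: str,
                 poll_interval: float = 1.0, timeout: float = 60.0) -> Any:
    """Poll a run until it leaves the queued and in_progress states.

    Raises TimeoutError when the run is still busy after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        run = client.beta.threads.runs.retrieve(
            run_id=run_id, thread_id=thread_id)
        if run.status not in ("queued", "in_progress"):
            return run
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"Run {run_id} is still {run.status} after {timeout} seconds")
        time.sleep(poll_interval)
```

The returned run can have any other status, including requires_action, so the caller still decides what to do next.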

The source code for the thread can be found at this location.

Tools

We already mentioned the tools that the assistant can use. We have to provide the description of the tool to the Assistant. The following code block shows the specification for one function.

    def_suggest_coffee_based_on_description = {
        "name": "suggest_coffee_based_on_description",
        "description": ("Suggests a product based on the given "
                        "ingredients. The result is a valid product "
                        "name or an empty string if no products "
                        "are found."),
        "parameters": {
            "type": "object",
            "properties": {
                "input": {
                    "type": "string",
                    "description": "The coffee to suggest a coffee for"
                }
            },
            "required": ["input"]
        }
    }

In the code block you see the name, which is important to us: we use it to call the function, so the name of the Python function needs to be exactly the same as the name specified here. The description is really important for the LLM to understand what the tool does. The parameters are the values that the LLM provides when it asks to call the tool. Again, their descriptions are really important for the LLM to understand what values to provide.
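
Calling a function by its name can be sketched with `importlib`. This is an assumption about how a wrapper could use the tools_module_name, not the code from the repository; the standalone `call_tool` function and the choice to return "ERROR" for an unknown tool are made up for illustration.

```python
import importlib
import json


def call_tool(tools_module_name: str, name: str, arguments: dict) -> str:
    """Look up the function `name` in the tools module and call it.

    `arguments` is the parsed JSON object that the LLM provided.
    """
    module = importlib.import_module(tools_module_name)
    function = getattr(module, name, None)
    if not callable(function):
        return "ERROR"  # assumption: report unknown tools as an error
    result = function(**arguments)
    # Tool outputs are submitted as strings, so serialise anything else.
    return result if isinstance(result, str) else json.dumps(result)
```

With the module used in this post, a call would look like `call_tool("openai_assistant.coffee.tools", "suggest_coffee_based_on_description", {"input": "something with milk"})`. This is also why the function name and the parameter names in the specification have to match the Python function exactly.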

In this example we use Weaviate to recommend a drink using the provided text. The next code block shows the implementation.

    import os

    # AccessWeaviate and logger_coffee are defined elsewhere in the repository.
    def suggest_coffee_based_on_description(input: str):
        weaviate = AccessWeaviate(
            url=os.getenv("WEAVIATE_URL"),
            access_key=os.getenv("WEAVIATE_ACCESS_KEY"),
            openai_api_key=os.getenv("OPENAI_API_KEY"))

        result = weaviate.query_collection(
            question=input, collection_name="coffee")
        weaviate.close()

        # An empty string tells the assistant that nothing matched.
        if len(result.objects) == 0:
            logger_coffee.warning("No products found")
            return ""

        return result.objects[0].properties["name"]
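
The query_collection method of the AccessWeaviate wrapper is not shown in this post. As a rough idea of the hybrid search behind it, the sketch below assumes a collection object from the Weaviate Python client v4 (for example obtained with `client.collections.get("coffee")`). The function name `suggest_product_name` and the default `alpha` are assumptions.

```python
from typing import Any


def suggest_product_name(collection: Any, text: str,
                         alpha: float = 0.5) -> str:
    """Return the name of the best hybrid-search match, or an empty string.

    Assumption: `collection` is a Weaviate v4 collection. `alpha` balances
    keyword search (0) and vector search (1).
    """
    response = collection.query.hybrid(query=text, alpha=alpha, limit=1)
    if not response.objects:
        return ""
    return response.objects[0].properties["name"]
```

Hybrid search combines exact keyword matches, useful when the visitor names a product, with vector similarity, useful for descriptions such as "something sweet with milk".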

Concluding

This blog post intends to give you an idea of what it means to work with Assistants. Check the code repository if you want to try it out yourself. The readme file describes the order in which you have to run the different scripts: one creates the Assistant, one loads the data into Weaviate, and one runs the sample application.

I hope you like the post. Feel free to comment or get in touch if you have questions.

References

- https://platform.openai.com/docs/assistants/overview

- https://github.com/jettro/ai-assistant
