Deploying a secure AWS Elasticsearch cluster using CDK

If there is one thing I have seen a lot with Elasticsearch clusters in the wild, it is security problems. An out-of-the-box Elasticsearch installation does not have username/password protection like most databases do. The basic license nowadays does give you that option, but you still have to configure it yourself. When AWS introduced its Elasticsearch service, it did not configure security out of the box either. As a result, Elasticsearch clusters have become notorious for exposed data. Check an article like this one; there are many more. Does this mean you should not use Elasticsearch? No, but you must know what you are doing.

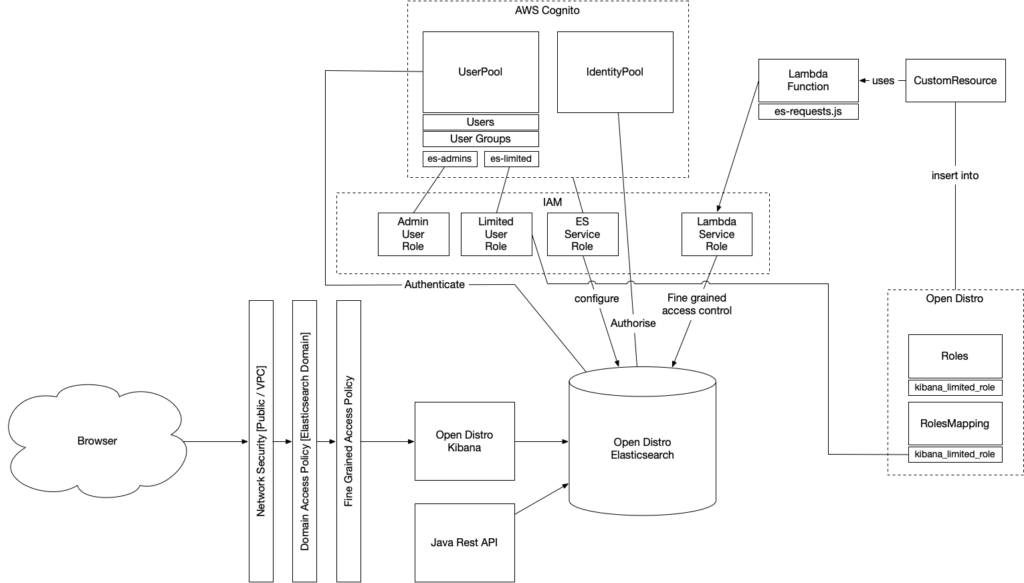

When you need an Elasticsearch cluster, you can set everything up yourself. You can read more about the security options here. Another approach is to use a cloud service. As the title says, in this blog I discuss how you can create a secure cluster with AWS. When you create a cluster, the default AWS approach is VPC access; if you expose your cluster to the public, you should at least restrict access to specific IP addresses or users. AWS uses Open Distro, which comes with fine-grained access control: you can secure specific indexes, documents and even fields. Roles within Open Distro can be combined with backend roles coming from IAM, and with Cognito you can manage users and user groups from AWS.

Creating a fully configured, secure AWS Elasticsearch cluster with Kibana and Cognito is doable through the AWS console. A blog post by the AWS team already gives a good overview and an example: “Launch Amazon Elasticsearch Service …”. Still, I wanted to use the latest and greatest AWS CDK to automate the deployment. I also want to give you more information about the different components you need to fully secure your AWS Elasticsearch cluster.

What are we creating?

We need an Elasticsearch cluster that is exposed through Kibana. Kibana is secured with a username/password combination. Users are divided into two groups: admins and limited users. Limited users can still log in to Kibana but only have read access to specific indexes. Admins can do everything they want through Kibana. Besides access through Kibana, we also have a Java application that exposes an API and needs access to the cluster.

Below you can find a schematic overview of the solution:

As you can see, there is more to it than a simple Elasticsearch installation. You can configure the same solution through the AWS Console, but that does not make it repeatable. Let us break it down into the different components:

- Browser – This is used to connect to the Kibana GUI to interact with the Elasticsearch cluster data.

- AWS Infrastructure – This is used to deliver a highly scalable and secure environment for keeping and presenting your data.

- AWS Elasticsearch – An Elasticsearch (Open Distro) cluster accessible through Kibana. It comes with many features out of the box, such as logging, fine-grained security, backups and upgrades, and it is, of course, highly scalable.

- AWS Cognito – Used to authenticate users and authorise requests. The user pool keeps its own collection of users and groups. The identity pool can use the user pool, but also numerous other identity providers, such as OpenID Connect providers. Cognito provides role-based authorisation to Elasticsearch.

- AWS IAM – Used by most components to enable security. It provides the roles, service principals and policies that make the security requirements of our components possible.

- AWS Lambda – Used to call Elasticsearch to configure Open Distro security.

The sample code

You can find the sample code in this GitHub repository. Most of it is based on a sample provided by AWS. To create a project yourself, use the cdk init mechanism and install a few additional dependencies:

```shell
cdk init app --language typescript
npm install @aws-cdk/custom-resources
npm install @aws-cdk/aws-elasticsearch
npm install @aws-cdk/aws-lambda
npm install @aws-cdk/aws-cognito
npm install @aws-cdk/aws-iam
```

The code repository contains the configuration of the stack; check the file aws-es-CDK-stack.ts. The first step is configuring the user pool and the identity pool. The user pool has a lot of options. The sample code shows how to change the templates for the SMS and email messages sent to new accounts. You can also change the logos and colours to create login pages that match your corporate style. Notice that we add a Cognito domain to the user pool: Kibana uses the hosted login pages on that domain. The identity pool starts out basic; we add more configuration later, once the required roles are available.
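Because applicationPrefix is reused as the Cognito domain prefix, it has to satisfy Cognito's naming rules. The sketch below is a minimal check, assuming the rules as summarised from the Cognito documentation (lowercase letters, digits and hyphens, no leading or trailing hyphen, at most 63 characters, and none of the words aws, amazon or cognito). The helper names are hypothetical and the check is an approximation, not an exhaustive validator.

```typescript
// Hypothetical helper: approximate Cognito domain prefix rules.
// Lowercase letters, digits and hyphens; no hyphen at the start or end;
// 1 to 63 characters in total.
const DOMAIN_PREFIX_PATTERN = /^[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?$/;
// Assumption: words Cognito does not accept inside a domain prefix.
const RESERVED_WORDS = ["aws", "amazon", "cognito"];

function isValidCognitoDomainPrefix(prefix: string): boolean {
  if (!DOMAIN_PREFIX_PATTERN.test(prefix)) {
    return false;
  }
  return !RESERVED_WORDS.some((word) => prefix.includes(word));
}

// Base URL of the hosted login pages Kibana redirects users to.
function hostedUiBaseUrl(prefix: string, region: string): string {
  return `https://${prefix}.auth.${region}.amazoncognito.com`;
}
```

A prefix such as kibana-demo passes, while KibanaDemo (uppercase) or my-aws-demo (reserved word) is rejected before cdk deploy gets a chance to fail on it.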

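```typescript
// Imports for the snippets in this post (AWS CDK v1 packages)
import * as path from 'path';
import { CustomResource, Duration } from '@aws-cdk/core';
import { CfnIdentityPool, CfnUserPoolGroup, UserPool } from '@aws-cdk/aws-cognito';
import * as es from '@aws-cdk/aws-elasticsearch';
import {
  AnyPrincipal, Effect, FederatedPrincipal, ManagedPolicy,
  PolicyStatement, Role, ServicePrincipal,
} from '@aws-cdk/aws-iam';
import * as lambda from '@aws-cdk/aws-lambda';
import { Provider } from '@aws-cdk/custom-resources';

// Methods of the stack class:
private createUserPool(applicationPrefix: string) {
  const userPool = new UserPool(this, applicationPrefix + 'UserPool', {
    userPoolName: applicationPrefix + ' User Pool',
    // Templates for the invitation sent to new accounts
    userInvitation: {
      emailSubject: 'With this account you can use Kibana',
      emailBody: 'Hello {username}, you have been invited to join our awesome app! Your temporary password is {####}',
      smsMessage: 'Hi {username}, your temporary password for our awesome app is {####}',
    },
    signInAliases: {
      username: true,
      email: true,
    },
    autoVerify: {
      email: true,
    },
  });

  // Kibana uses the hosted login pages on this domain
  userPool.addDomain('cognitoDomain', {
    cognitoDomain: {
      domainPrefix: applicationPrefix,
    },
  });

  return userPool;
}

private createIdentityPool(applicationPrefix: string) {
  // Starts out basic; more configuration follows once the roles exist
  return new CfnIdentityPool(this, applicationPrefix + "IdentityPool", {
    allowUnauthenticatedIdentities: false,
    cognitoIdentityProviders: [],
  });
}
```

The next step is creating the four roles we need: two roles for the user groups (one for admin users and one for limited users) and two service roles (one that Elasticsearch uses to configure Cognito and one that executes the Lambda functions). The function below creates a user role.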

```typescript
private createUserRole(idPool: CfnIdentityPool, identifier: string) {
  return new Role(this, identifier, {
    assumedBy: new FederatedPrincipal('cognito-identity.amazonaws.com', {
      // The token must be issued for our own identity pool...
      "StringEquals": { "cognito-identity.amazonaws.com:aud": idPool.ref },
      // ...and belong to an authenticated user
      "ForAnyValue:StringLike": {
        "cognito-identity.amazonaws.com:amr": "authenticated",
      },
    }, "sts:AssumeRoleWithWebIdentity"),
  });
}
```
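For reference, a role created by createUserRole ends up with a trust policy along the lines of the one built below. The sketch also contains a simplified, hypothetical model of how the two conditions are evaluated against the token claims; STS does the real evaluation, and the helper names are illustrative only.

```typescript
// Claims from a Cognito identity token that the conditions look at.
interface CognitoClaims {
  aud: string;   // identity pool the token was issued for
  amr: string[]; // authentication methods, e.g. ["authenticated", ...]
}

// Trust policy equivalent to the FederatedPrincipal in createUserRole.
function cognitoTrustPolicy(identityPoolId: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { Federated: "cognito-identity.amazonaws.com" },
        Action: "sts:AssumeRoleWithWebIdentity",
        Condition: {
          StringEquals: { "cognito-identity.amazonaws.com:aud": identityPoolId },
          "ForAnyValue:StringLike": { "cognito-identity.amazonaws.com:amr": "authenticated" },
        },
      },
    ],
  };
}

// Simplified evaluation: aud must match exactly, and at least one amr value
// must match "authenticated" (the pattern has no wildcards).
function mayAssumeRole(identityPoolId: string, claims: CognitoClaims): boolean {
  return claims.aud === identityPoolId && claims.amr.some((value) => value === "authenticated");
}
```

A guest identity carries amr ["unauthenticated"], so it fails the second condition, and a token from another identity pool fails the first.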

We create a FederatedPrincipal with two conditions that decide whether a logged-in user may assume the role. If the claims in the authenticated token match the conditions, the user can assume the role. In this case, we expect a token from a Cognito authentication and check that it was issued for our own identity pool (the aud claim) and that the user is authenticated (the amr claim). More information on this topic can be found here. The code for creating a service role is a bit shorter.

```typescript
private createServiceRole(identifier: string, servicePrincipal: string, policyName: string) {
  return new Role(this, identifier, {
    assumedBy: new ServicePrincipal(servicePrincipal),
    managedPolicies: [ManagedPolicy.fromAwsManagedPolicyName(policyName)],
  });
}
```

What matters here are the service principal that may assume the role and the managed policy to attach. The role that lets Elasticsearch configure Cognito is assumed by the service principal es.amazonaws.com and needs the AWS managed policy AmazonESCognitoAccess.
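As a minimal sketch of what createServiceRole produces for the Elasticsearch Cognito role, the functions below build the trust policy for a service principal and the ARN of an AWS managed policy. The helper names are hypothetical, and the standard aws partition is assumed as the default.

```typescript
// Trust policy that lets an AWS service assume the role.
function serviceTrustPolicy(servicePrincipal: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { Service: servicePrincipal },
        Action: "sts:AssumeRole",
      },
    ],
  };
}

// AWS managed policies live in the "aws" account namespace of IAM.
function awsManagedPolicyArn(policyName: string, partition = "aws"): string {
  return `arn:${partition}:iam::aws:policy/${policyName}`;
}
```

Managed policies that live under a path keep that path in their name, for example service-role/AWSLambdaBasicExecutionRole becomes arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole.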

With the two user roles in place, we can create the user groups: one for admins and one for limited users. The next code block creates the admin user group. Notice that it refers to the user pool by its ID and to the admin role by its ARN, and that it gives the group a name.

```typescript
private createAdminUserGroup(userPoolId: string, adminUserRoleArn: string) {
  new CfnUserPoolGroup(this, "userPoolAdminGroupPool", {
    userPoolId: userPoolId,
    groupName: "es-admins",
    roleArn: adminUserRoleArn,
  });
}
```

The next step is what we have all been waiting for: creating the AWS Elasticsearch domain. A lot comes together in this step. We need references to the identity pool and the user pool to configure the connection between Kibana and Cognito. We need the service role that is allowed to configure Elasticsearch for Cognito. We need the Lambda service role, which becomes the master user of fine-grained access control so it can configure it. We can configure the number of data nodes, dedicated master nodes, the sizing of the nodes, logging, encryption, Cognito authentication for Kibana and fine-grained access control. In the final bit, we give the limited user role and the Lambda service role HTTP access to the domain.

```typescript
private createESDomain(domainName: string,
                       idPool: CfnIdentityPool,
                       esServiceRole: Role,
                       esLimitedUserRole: Role,
                       lambdaServiceRole: Role,
                       userPool: UserPool) {
  // Covers every path on the domain; used in both policies below
  const domainArn = "arn:aws:es:" + this.region + ":" + this.account + ":domain/" + domainName + "/*";

  const domain = new es.Domain(this, 'Domain', {
    version: es.ElasticsearchVersion.V7_9,
    domainName: domainName,
    enableVersionUpgrade: true,
    capacity: {
      dataNodes: 1,
      dataNodeInstanceType: "r5.large.elasticsearch",
    },
    ebs: {
      volumeSize: 10,
    },
    logging: {
      appLogEnabled: false,
      slowSearchLogEnabled: false,
      slowIndexLogEnabled: false,
    },
    nodeToNodeEncryption: true,
    encryptionAtRest: {
      enabled: true,
    },
    enforceHttps: true,
    // Open resource policy; fine-grained access control decides what each caller may do
    accessPolicies: [new PolicyStatement({
      effect: Effect.ALLOW,
      actions: ["es:ESHttp*"],
      principals: [new AnyPrincipal()],
      resources: [domainArn],
    })],
    // Kibana login through Cognito
    cognitoKibanaAuth: {
      identityPoolId: idPool.ref,
      role: esServiceRole,
      userPoolId: userPool.userPoolId,
    },
    // The Lambda service role is the master user of fine-grained access control
    fineGrainedAccessControl: {
      masterUserArn: lambdaServiceRole.roleArn,
    },
  });

  // HTTP access to the domain for the limited user role and the Lambda service role
  new ManagedPolicy(this, 'limitedUserPolicy', {
    roles: [esLimitedUserRole, lambdaServiceRole],
    statements: [
      new PolicyStatement({
        effect: Effect.ALLOW,
        resources: [domainArn],
        actions: ['es:ESHttp*'],
      }),
    ],
  });

  return domain;
}
```
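The domain name ends up in both the domain itself and the ARN used by the two policies. The sketch below, assuming the documented naming rules for Amazon Elasticsearch Service domains (start with a lowercase letter, 3 to 28 characters, only lowercase letters, digits and hyphens), checks a name and builds the same ARN as createESDomain. Both helper names are hypothetical.

```typescript
// Assumed naming rules: lowercase letter first, then 2 to 27 more
// lowercase letters, digits or hyphens (3 to 28 characters in total).
const ES_DOMAIN_NAME_PATTERN = /^[a-z][a-z0-9-]{2,27}$/;

function isValidEsDomainName(name: string): boolean {
  return ES_DOMAIN_NAME_PATTERN.test(name);
}

// Same ARN as in createESDomain; the trailing "/*" matches every path
// (indexes, _search, _opendistro/..., and so on) on the domain.
function esDomainResourceArn(region: string, account: string, domainName: string): string {
  return `arn:aws:es:${region}:${account}:domain/${domainName}/*`;
}
```

This is mostly useful when domainName is derived from other input, such as the application prefix.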

The last bit is configuring Open Distro security. In the sample, I only scratch the surface of what is possible, but the mechanism makes it easy to add more rules. The mechanism is to create a Lambda function that interacts with Elasticsearch. The Lambda code is read from a file in the repository, which is a shameless copy from the AWS sample mentioned earlier. Notice that we supply the Lambda with the Elasticsearch domain endpoint, the Lambda service role and the current region. We then create a provider with the Lambda as its event handler, and a custom resource that sends its properties to that provider, so the Lambda receives the requests. Each request contains an HTTP method, a path and a body.

Fine-grained access control starts with roles as well. Beware: these are not the same as the IAM roles we talked about earlier. In the role mapping, we map a fine-grained access control role to an IAM role, called the backend role. The next and final code block shows the Lambda, the provider and the custom resource. I removed some of the requests, as the code block would otherwise go on and on; check the Git repository for the complete code.

```typescript
private executeOpenDistroConfiguration(lambdaServiceRole: Role, esDomain: es.Domain, esAdminUserRole: Role, esLimitedUserRole: Role) {
  /**
   * Function implementing the requests to Amazon Elasticsearch Service
   * for the custom resource.
   */
  const esRequestsFn = new lambda.Function(this, 'esRequestsFn', {
    // Lambda has since deprecated Node.js 10.x; pick a runtime that is still
    // supported and that the handler code in functions/es-requests works with
    runtime: lambda.Runtime.NODEJS_10_X,
    handler: 'es-requests.handler',
    code: lambda.Code.fromAsset(path.join(__dirname, '..', 'functions/es-requests')),
    timeout: Duration.seconds(30),
    role: lambdaServiceRole,
    environment: {
      "DOMAIN": esDomain.domainEndpoint,
      "REGION": this.region,
    },
  });

  // The provider invokes esRequestsFn for the lifecycle events of the custom resource
  const esRequestProvider = new Provider(this, 'esRequestProvider', {
    onEventHandler: esRequestsFn,
  });

  new CustomResource(this, 'esRequestsResource', {
    serviceToken: esRequestProvider.serviceToken,
    properties: {
      requests: [
        {
          // Fine-grained access control role: read access to test* indexes
          "method": "PUT",
          "path": "_opendistro/_security/api/roles/kibana_limited_role",
          "body": {
            "cluster_permissions": [
              "cluster_composite_ops",
              "indices_monitor",
            ],
            "index_permissions": [{
              "index_patterns": ["test*"],
              "dls": "",
              "fls": [],
              "masked_fields": [],
              "allowed_actions": ["read"],
            }],
            "tenant_permissions": [{
              "tenant_patterns": ["global"],
              "allowed_actions": ["kibana_all_read"],
            }],
          },
        },
        {
          // Map that role to the limited user IAM role (the backend role)
          "method": "PUT",
          "path": "_opendistro/_security/api/rolesmapping/kibana_limited_role",
          "body": {
            "backend_roles": [esLimitedUserRole.roleArn],
            "hosts": [],
            "users": [],
          },
        },
        // ...further requests (for example for esAdminUserRole) are in the repository
      ],
    },
  });
}
```
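To make the request mechanism more concrete, here is a minimal sketch with hypothetical helpers: two builders that produce request objects in the same shape as above, and an onEvent-style function showing how a handler could process them. Sending is injected as a function so the sketch stays self-contained; a real handler also has to sign each request with SigV4 using the Lambda role's credentials, which is left out here. Skipping the requests on Delete is an assumption, not necessarily what the repository's handler does.

```typescript
interface EsRequest {
  method: "GET" | "PUT" | "PATCH" | "DELETE";
  path: string;
  body?: Record<string, unknown>;
}

const SECURITY_API = "_opendistro/_security/api";

// Fine-grained access control role with read access to the given index patterns.
function putReadOnlyRole(name: string, indexPatterns: string[]): EsRequest {
  return {
    method: "PUT",
    path: `${SECURITY_API}/roles/${name}`,
    body: {
      cluster_permissions: ["cluster_composite_ops", "indices_monitor"],
      index_permissions: [{
        index_patterns: indexPatterns,
        dls: "",
        fls: [],
        masked_fields: [],
        allowed_actions: ["read"],
      }],
      tenant_permissions: [{ tenant_patterns: ["global"], allowed_actions: ["kibana_all_read"] }],
    },
  };
}

// PUT creates or replaces the whole mapping of the role, so list every
// backend role (IAM role ARN) that should keep it.
function putRoleMapping(name: string, backendRoleArns: string[]): EsRequest {
  return {
    method: "PUT",
    path: `${SECURITY_API}/rolesmapping/${name}`,
    body: { backend_roles: backendRoleArns, hosts: [], users: [] },
  };
}

interface CustomResourceEvent {
  RequestType: "Create" | "Update" | "Delete";
  ResourceProperties: { requests?: EsRequest[] };
}

// Resolves to the HTTP status code of the response.
type SendFn = (request: EsRequest) => Promise<number>;

async function onEvent(event: CustomResourceEvent, send: SendFn): Promise<{ PhysicalResourceId: string }> {
  // Assumption: nothing is undone on Delete.
  if (event.RequestType !== "Delete") {
    // One request at a time, in list order.
    for (const request of event.ResourceProperties.requests ?? []) {
      const status = await send(request);
      if (status < 200 || status >= 300) {
        // Throwing marks the custom resource, and so the deployment, as failed.
        throw new Error(`${request.method} ${request.path} returned ${status}`);
      }
    }
  }
  return { PhysicalResourceId: "esRequests" };
}
```

For the admins, one option (again an assumption, not taken from the repository) is putRoleMapping("all_access", [lambdaRoleArn, adminRoleArn]) with the predefined all_access role. Because PUT replaces the mapping and the Lambda role is the master user, its ARN is kept in the list.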

Oh, what a journey it has been. Finally, it is time to run cdk deploy, create the admin and limited users, and start using the Elasticsearch cluster.

If you have questions, don’t hesitate to contact me. If you don’t want to create and maintain your own cluster, you can contact me as well. And if you have a question about the best way to use Elasticsearch, how to monitor your search performance or how to return relevant results, you guessed it, you can contact me as well.
