The Evolution of AWS from a Cloud-Native Development Perspective: Serverless, Event-Driven, Developer-Friendly, Sustainable

Recent AWS announcements feature fewer new services and instead underline the cloud giant’s efforts to strengthen its massive portfolio of building blocks. AWS CEO Adam Selipsky has mapped out a course that intensifies AWS’s focus on serverless technologies, event-driven architectures, an improved developer experience, and sustainability.

In this blog post, we outline the direction cloud-native engineering is heading in, how you can learn to leverage AWS’s portfolio of managed services and primitives to your advantage, and how cloud technology is reshaping the field of software engineering.

If you prefer watching a video, we’ve got you covered! We recently broadcast an AWS re:Invent re:Cap focused on cloud-native development, featuring most of this article’s themes. You can find the recording at the end of this post.

What AWS is telling us

With so many services in its portfolio already, this year’s AWS re:Invent didn’t hold any massively surprising announcements. With the yearly conference reaching its 10-year milestone — and AWS being around for 15 years — the AWS platform is maturing while at the same time evolving to meet market demands.

AWS leadership’s re:Invent keynotes

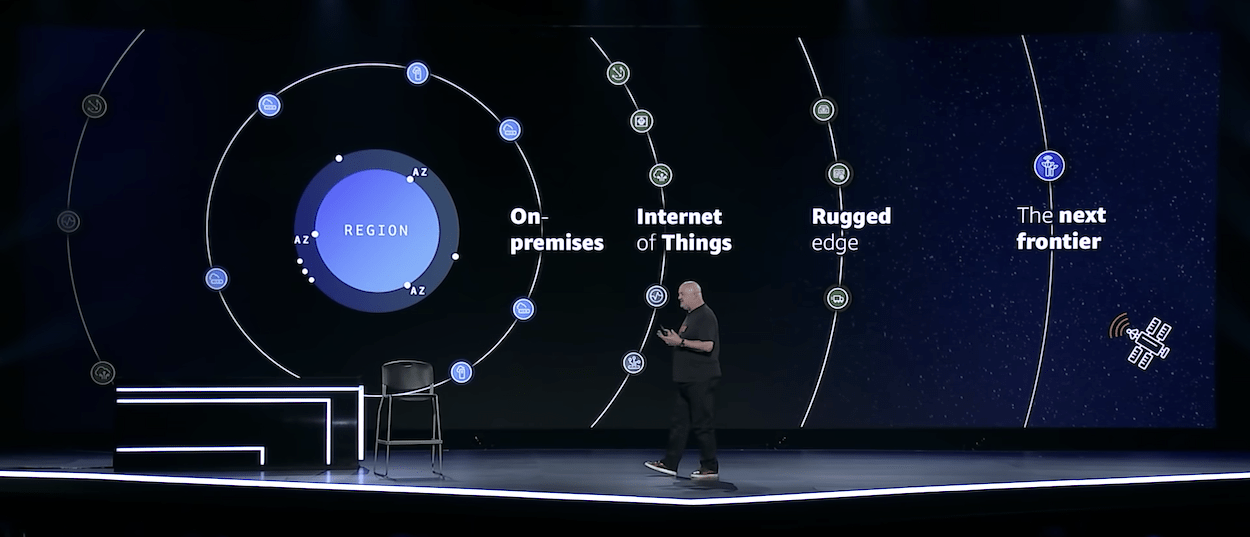

Newly at the helm of AWS, Adam Selipsky delivered his first re:Invent keynote. Selipsky’s primary message: AWS is shifting from merely offering infrastructure primitives to embracing the idea of being a so-called platform of platforms. As a result, AWS’s offerings will increasingly become an integral part of organizational value chains.

While Amazon CTO Werner Vogels took the stage after an introduction referencing Fear and Loathing in Las Vegas, his keynote wasn’t as spectacular. Dr. Vogels took the time to make a case for Well-Architected applications, which we interpret as a good signal for builders. Other highlights were the release of Construct Hub and the CDK Patterns library.

Primitives, not frameworks

An important theme these last few months was the expansion and refinement of AWS as a cloud-native platform. You can view AWS as a data center containing compute resources and seemingly limitless storage at the other end of the internet, or maybe as a big bunch of infrastructural primitives. The way we look at the platform is by grouping its services into three categories:

- Infrastructural primitives: storage, networking, and compute (a.k.a. Infrastructure as a Service, IaaS).

- Middleware-oriented services: web servers, container orchestrators, databases, message queues (a.k.a. Platform as a Service, PaaS).

- Serverless: take what you need, pay-as-you-go.

Building something on a platform containing 200+ services might seem a bit overwhelming. Therefore, from the perspective of cloud-native development, we use this rule of thumb: start building from the most modern collection of services first and, if needed, complement the solution using more primitive building blocks.

Frequently, you will end up with a serverless solution that is rapidly and almost infinitely scalable and highly cost-effective. However, sometimes there are missing pieces of the puzzle, and you can’t complete it with serverless services. We can then turn to platform primitives or infrastructure services like containers, RDS, virtual machines, or even complete SaaS solutions. Don’t forget to update your solutions from time to time, as the platform never stops evolving.

Reduce risk, improve performance, grow revenue, increase efficiency

Effectively adopting the cloud means making the cloud earn its keep. It’s not just a virtualized data center; at least, that’s not the mindset that enables you to accelerate your organization towards its goals. Cloud-native development requires understanding cloud-native best practices in terms of performance, resiliency, cost optimization, and security. Luckily, AWS keeps investing in adoption frameworks, its Well-Architected Framework, and certification and training programs.

The ongoing evolution of serverless: commoditize all the things

You can immediately tell the serverless category from above is something special. Its services allow you to focus on functional value without worrying about what is needed to run it. You can compare a serverless service to a commodity, like electricity: you use it anytime you need it, without much second thought. For a while, serverless was synonymous with functions (FaaS) or Lambda, but its reach rapidly extends beyond compute to data stores and application integration.

Serverless technology keeps gaining traction, as we can see in its continuous evolution. Let’s look at AWS’s current serverless portfolio and see how it evolves.

Three categories of building blocks

Roughly speaking, we can divide AWS’s serverless building blocks into three categories:

- Compute: running code without provisioning servers (Lambda, Fargate).

- Data Storage: storing objects, documents, relational and other data (Simple Storage Service (S3), DynamoDB, Relational Database Service Proxy, Aurora Serverless).

- (Application) integration: EventBridge, Step Functions, Simple Queue Service (SQS), Simple Notification Service (SNS), API Gateway, AppSync.

Combining these building blocks, you can quickly create valuable solutions at scale.

Serverless expansion areas

While SaaS is powerful and often offers excellent value for money, it has significant constraints in terms of flexibility. To win in the marketplace, we need more flexibility for our differentiating technology. These are the lines along which serverless is evolving. Its journey started at compute, but the serverless philosophy quickly moved into other AWS categories.

Big data and streaming

Amazon MSK will get a serverless counterpart, which is great news for organizations running Kafka workloads. The same goes for AWS’s big data platform Amazon EMR and its data warehouse offering Amazon Redshift. Amazon Kinesis is also evolving its data streaming service, exemplified by the new Data Streams On-Demand feature. Last but certainly not least: AWS Data Exchange for APIs makes it a breeze to use third-party data while leveraging AWS-native authentication and governance.

Application integration

Apart from getting extra ephemeral storage, AWS Lambda now supports partial batch responses for SQS and event source filtering for Kinesis, DynamoDB, and SQS. EventBridge added Amazon S3 Notifications. On the surface, these might sound like small changes. But as Matt Coulter puts it: code is a liability, not an asset. Anything you can push to the cloud vendor gains you some security and an increased ability to focus on value. In that same vein, you can now control Amazon Athena workflows using AWS Step Functions.
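To make the partial batch responses for SQS more tangible, here is a minimal sketch of a Lambda handler that reports only the failed messages of a batch. The `Records`, `messageId`, and `body` fields and the `batchItemFailures` response shape follow the documented SQS integration; `process_order` and its validation rule are hypothetical stand-ins for your own logic. It assumes `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json


def process_order(order: dict) -> None:
    """Hypothetical business logic: reject orders without an order_id."""
    if "order_id" not in order:
        raise ValueError("order_id is required")


def handler(event: dict, context: object = None) -> dict:
    """Process an SQS batch and report only the failed messages back to Lambda."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Only this message returns to the queue; the rest of the batch is done.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Without this feature, a single bad message would cause the whole batch to be retried, which is exactly the kind of retry bookkeeping we would rather leave to the platform.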

Are applications, infrastructure, and integration separable?

Now that serverless is moving beyond compute into the data and integration layers, we might ask ourselves: are applications, infrastructure, and integration separable? Is programming still mostly about functions and logic, and where does it bleed into the domain of operations? The advent of serverless is pushing boundaries everywhere and blurring previously clear and stable lines.

That’s excellent news, in our opinion: the more control and flexibility our teams have, the faster we can innovate. Gregor Hohpe, an Enterprise Strategist at AWS, says: “I firmly believe that we are just starting to realize the true potential of cloud automation. Modern cloud automation isn’t just about reducing toil. (…) it can help us blur the line between application and automation code.” We agree wholeheartedly.

The future (of cloud) is event-driven

Another direction cloud technology is heading in is that of event-driven architectures. Not only is the cloud itself highly event-driven, but applications built on cloud platforms also tend to fit most naturally when they harness the power of events. Why is that?

Agility in architecture

Market forces are driving organizations to become more agile. As Jeff Bezos puts it: “The only sustainable advantage you can have over others is agility, that’s it.” To fulfill this business requirement, organizations must learn to leverage technology to respond to and drive change. Event-driven architectures present the necessary characteristics:

- Loosely coupled: heterogeneous services can share information effectively while hiding implementation details.

- Scalable: multiple types of consumers can work in parallel, massively if needed.

- Independent: evolution, scaling, and failing can happen in isolation, especially when using buffers like event routers.

- Auditable: if events are routed and stored centrally, we can quickly implement auditing, policy enforcement, and information access control.

- Frugal: if we push changes when available, we don’t need to waste resources continuously polling for changes.

Event-driven systems enable us to focus on system behavior and temporality within a business domain, freeing us from the chains of rigid structures incapable of dealing with changing needs and requirements. There is a trade-off (of course): agile systems are complex and thus force us to learn to manage what looks like chaos but actually isn’t.
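The characteristics listed above can be illustrated with a toy, in-memory event router. This is a sketch for intuition only, not how Amazon EventBridge or SNS work internally; the `EventRouter` class, the `OrderPlaced` event type, and the `order_id` field are all made up for the example.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Event = dict
Handler = Callable[[Event], None]


class EventRouter:
    """Toy router: producers publish, consumers subscribe by event type."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.archive: List[Event] = []  # central log of everything published

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Archive the event, push it to each subscriber, return the delivery count."""
        self.archive.append(event)
        delivered = 0
        for handler in self._handlers.get(event["type"], []):
            try:
                handler(event)
                delivered += 1
            except Exception:
                # A failing consumer affects neither the producer nor its peers.
                continue
        return delivered


router = EventRouter()
invoiced, notified = [], []
router.subscribe("OrderPlaced", lambda event: invoiced.append(event["order_id"]))
router.subscribe("OrderPlaced", lambda event: notified.append(event["order_id"]))
router.publish({"type": "OrderPlaced", "order_id": 42})
```

The producer knows nothing about its consumers (loosely coupled), consumers fail in isolation (independent), every event passes through one archive (auditable), and nobody polls for changes (frugal).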

AWS building blocks for event-driven architectures

Now, how is AWS helping us compose these systems? Whether you are building event-sourced systems, leveraging CQRS, or just notifying components, AWS has the building blocks you need:

- Producers: most AWS primitives, especially serverless ones, produce events. For example, we can feed Lambda with events from API Gateway, CloudWatch, DynamoDB, S3, Kinesis, and many more services. Besides that, we can use custom apps, SaaS solutions, and microservices as event producers.

- Consumers: notable consumers are AWS Lambda, Amazon SQS, Amazon Kinesis Data Firehose, and Amazon SNS. We can also use APIs from SaaS offerings and custom apps as event consumers.

- Routers: we can fulfill most integration needs using Amazon EventBridge and Amazon SNS.

- Well-Architected: the AWS Well-Architected Framework describes best practices, which we can assess and monitor using the AWS Well-Architected Tool.

Combining these AWS services and using them to integrate SaaS and self-built products gives us the leverage we miss when only using off-the-shelf products.
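As an illustration of the producer and consumer roles, the following sketch shows a Lambda function consuming events produced by S3. The record fields (`eventName`, `s3.bucket.name`, `s3.object.key`) follow the S3 event notification format, in which object keys arrive URL-encoded; the function names and the returned summary are our own assumptions.

```python
from urllib.parse import unquote_plus


def extract_objects(event: dict) -> list:
    """Return (bucket, key) pairs for every created object in an S3 notification."""
    objects = []
    for record in event.get("Records", []):
        # Ignore deletions and other event types in this sketch.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3 = record["s3"]
        # Keys are URL-encoded in the notification, e.g. spaces arrive as '+'.
        objects.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return objects


def s3_handler(event: dict, context: object = None) -> dict:
    """Hypothetical consumer: count the newly created objects in this event."""
    return {"processed": len(extract_objects(event))}
```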

How are event-driven cloud architectures evolving?

The answer to this question is short and straightforward: along the same lines as serverless. Last December, AWS mostly announced incremental improvements. But they quickly add up, culminating in an ever more powerful platform to build and evolve our solutions on. And the announcements don’t stop when re:Invent is done: AWS is working to improve its event-driven primitives year-round.

Amazon EventBridge is an excellent example of this continuous investment. It was introduced in mid-2019 and has gained a lot of features since then: a schema registry, archiving and replaying of events, and a long list of event destinations. Its evolving event pattern matching, filtering, and routing options embody the ‘code is liability’ philosophy, enabling us to focus on value delivery. Recently, S3 Event Notifications were added to EventBridge’s features, giving us more direct, reliable, and developer-friendly options when responding to S3 object changes. For the web-scale organizations among us, AWS introduced cross-region event routing.
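To give a feel for event pattern matching, here is a deliberately simplified matcher. In an EventBridge pattern, each field lists the values it accepts and nested objects match nested event fields. This sketch covers only exact-value matching and is an assumption-laden approximation, not the EventBridge implementation; the bucket name `uploads` is made up.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Return True if every field in the pattern accepts the event's value."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: descend into the nested event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf: 'expected' is the list of accepted values.
            return False
    return True


pattern = {
    "source": ["aws.s3"],
    "detail-type": ["Object Created"],
    "detail": {"bucket": {"name": ["uploads"]}},
}
```

The point is that a rule like this is declared once at the router instead of being re-implemented as filtering code in every consumer.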

We mentioned Lambda’s new message filtering features above but want to highlight them again since they underline AWS’s continued investment in this area. Lastly, AWS IoT TwinMaker deserves some attention: it utilizes events as data sources and enables developers to create digital twins of real-world systems, which is nothing less than fantastic.

Improving the developer experience

AWS provides us with many building blocks, but using them effectively is not straightforward. Luckily for us, AWS seems to understand this problem and is steadily improving in that area.

Programmable everything: APIs, SDKs, and the AWS CDK

In 2002, Jeff Bezos’s so-called API Mandate forced all Amazon teams to expose their data and functionality through service interfaces. Amazon has built AWS around the same principles: every service is programmatically controllable, from starting a virtual machine to accessing a satellite. While this is an essential property of an effective cloud platform, it is not necessarily developer-friendly.

By now, AWS has incrementally and significantly improved in this space. Besides using their APIs, we can control services and infrastructure with a unified Command Line Interface (CLI), Software Development Kits (SDK), CloudFormation, and, since July 2019, the AWS Cloud Development Kit (CDK).

The CDK had a massive impact on developer productivity and satisfaction. While teams could already access and control services and infrastructure using the AWS SDK and their favorite programming language, infrastructure was primarily defined using incredible amounts of mostly punctuation marks and whitespace, also known as YAML or JSON. The CDK, initially available only in TypeScript and Python, finally gave developers an AWS-native means to define Infrastructure as actual Code. Since its introduction, AWS has added support for more languages, like Java, C#, and Go.
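The following sketch is not the CDK. It uses only the Python standard library to illustrate the underlying idea: once infrastructure is expressed in a general-purpose language, functions and loops replace copy-pasted template fragments. The helper names and logical IDs are hypothetical; the `AWS::SQS::Queue` resource type, `RedrivePolicy`, and `Fn::GetAtt` are standard CloudFormation.

```python
import json


def queue_with_dlq(name: str, max_receive_count: int = 3) -> dict:
    """Return CloudFormation resources for a queue plus its dead-letter queue."""
    dlq_id = f"{name}DeadLetterQueue"
    return {
        dlq_id: {"Type": "AWS::SQS::Queue"},
        f"{name}Queue": {
            "Type": "AWS::SQS::Queue",
            "Properties": {
                "RedrivePolicy": {
                    "deadLetterTargetArn": {"Fn::GetAtt": [dlq_id, "Arn"]},
                    "maxReceiveCount": max_receive_count,
                }
            },
        },
    }


def build_template(queue_names: list) -> dict:
    """Assemble one template containing a queue and DLQ pair per name."""
    resources = {}
    for name in queue_names:
        resources.update(queue_with_dlq(name))
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


print(json.dumps(build_template(["Orders", "Payments"]), indent=2))
```

The CDK takes this idea much further with typed constructs, sensible defaults, and synthesis to CloudFormation, so in practice we would reach for its constructs rather than hand-rolled helpers like these.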

Metrics, toolsets, and communities

The lines between infrastructure and logical code have thus been blurred, creating more opportunities to increase business agility. And AWS provides more tools to increase development productivity, like metrics and purpose-built toolsets. New developments in this space are:

- Community support platforms like the newly announced re:Post and Construct Hub.

- Monitoring improvements like CloudWatch Real-User Monitoring and Metrics Insights.

- A Secrets Detector addition to the software engineer’s best non-human programming buddy, Amazon CodeGuru.

Reducing complexity

Another way to increase (business) agility is by reducing complexity. AWS seems very aware of the complexity we add when developing cloud-native solutions. In response, it has recently released several offerings and improvements to its platform.

Less handcrafted code

We can reduce the amount of hand-crafted code we deploy in several areas (once more: code is a liability!). Business analysts can kickstart machine learning workloads using Amazon SageMaker Canvas, while others can visually create full-stack apps using Amplify Studio. More incremental improvements are enhanced dead-letter queue management for SQS and the Lambda and S3 enhancements mentioned earlier.

Feature flags and dark launches

Reducing operational complexity is a way to increase productivity. Modern organizations have learned to leverage feature flags and dark launches to test changes without introducing much operational overhead. Our colleague Nico Krijnen took the time to write down how Amazon CloudWatch Evidently and AWS AppConfig Feature Flags can help us in this regard.
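For readers new to the concept, a percentage-based dark launch can be sketched in a few lines. This is a generic illustration and does not use the CloudWatch Evidently or AppConfig APIs; the flag name, user IDs, and the hashing scheme are assumptions chosen for the example.

```python
import hashlib


def bucket_for(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Enable the flag for roughly rollout_percent percent of users."""
    return bucket_for(flag, user_id) < rollout_percent


# Hypothetical usage: expose the new checkout flow to about a quarter of users.
if is_enabled("new-checkout", "user-123", rollout_percent=25):
    pass  # serve the new code path
```

Because the bucket is derived from a hash rather than a random draw, a given user keeps seeing the same variant while the rollout percentage is raised. Managed services add what this sketch lacks: central configuration, gradual deployment, and metrics.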

Migration automation

Lastly, a powerful (but often not so straightforward) way to reduce complexity is to migrate existing workloads from the old data center to the cloud. AWS has been investing a lot of effort in this space and continues to do so, as evidenced by the recent releases of AWS Migration Hub Refactor Spaces, AWS Mainframe Modernization, and AWS Microservice Extractor for .NET.

Sustainability of the cloud, sustainability in the cloud

Software engineering has more dimensions than speed and complexity. With the advent of DevOps, FinOps, and the increased awareness of IT infrastructure’s impact on our environment, teams are increasingly responsible for more than just developing and deploying code.

Adrian Cockcroft, Amazon’s newly appointed VP of Sustainability Architecture, recently gave a very insightful talk on architecting for sustainability. Amazon has committed to net-zero carbon by 2040, and AWS aims to use 100% renewable energy by 2025. AWS needs its customers’ help to decrease its footprint, so it has introduced several sustainability tools and improvements to its platform.

The Customer Carbon Footprint Tool was released earlier this month, enabling AWS users to calculate the carbon emissions their workloads produce now and in the future. Reducing them is the next logical step, which is why AWS added a new Sustainability Pillar to its Well-Architected Framework. More concretely, teams can optimize resource usage by choosing from several new CPU options for EC2 or Lambda or by analyzing the enhanced AWS Compute Optimizer infrastructure metrics and acting accordingly.

Survival of the fittest

AWS’s cloud platform is becoming more mature, stable, developer-friendly, and sustainable while at the same time evolving rapidly to meet emerging business needs. By closely following the needs of its users and experimenting and growing with them, AWS keeps delivering the platform of the future.

For us cloud-native developers, that’s excellent news. Increasing business agility by creating and evolving event-driven, serverless, sustainable systems that can turn on a dime in response to customer needs: it’s what we need now and going forward.

