Exploring the concept behind Azure’s next-generation ingress platform

When Ingress Becomes Strategic

There’s a moment in every Kubernetes journey when ingress stops being just a configuration detail and becomes an architectural constraint. You start with a small cluster, maybe one or two microservices, and the standard NGINX ingress controller does exactly what you expect: simple routes, basic certificates, nothing fancy. Then the platform expands. You integrate workloads across multiple regions, extend environments with Azure Container Apps, and add a service mesh. Suddenly, that “temporary ingress setup” becomes a bottleneck.

Ingress sits at the intersection of your cloud environment, your governance model, and your users. It’s the point where security policies meet developer freedom, and where latency, observability, and compliance converge. In essence, this is where complexity concentrates.

Azure’s Application Gateway for Containers (AGC) is not a drop-in replacement for NGINX or Traefik. It represents a new category of managed ingress. It is not merely another Kubernetes controller but Azure’s effort to redefine ingress as part of a comprehensive, cloud-native delivery system that spans clusters, workloads, and networks.

From Controllers to Cloud Services

To grasp AGC’s conceptual significance, let’s revisit how we got here. Kubernetes ingress has always been both powerful and limited: it lets you express complex Layer 7 routing declaratively, yet it expects you to run the data plane yourself.

For most organizations, this involves deploying open-source controllers like NGINX, HAProxy, or Traefik as pods within the cluster. These controllers are flexible and extensible, but they also bring back many operational challenges you aimed to avoid with managed Kubernetes, such as patching, scaling, certificate renewal, configuration drift, and the ongoing question of “who owns this part of the puzzle.”

Azure’s initial effort to address this issue was the Application Gateway Ingress Controller (AGIC). AGIC linked AKS with Azure Application Gateway v2, enabling ingress rules to be specified via Kubernetes objects that configured the Application Gateway. While functional, it depended heavily on maintaining synchronization between the cluster state and external infrastructure, involving a controller, a sync loop, and a translation layer.

Application Gateway for Containers removes most of that middle layer. It is not an existing gateway that a controller configures from the outside. Instead, it is an Azure service built from the ground up for Kubernetes. A lightweight ALB Controller in the cluster watches Kubernetes Gateway API resources and hands them to AGC, which applies them directly. The result is a managed, multi-tenant ingress layer that is aware of cluster topology, namespaces, and policies, while the ingress data plane runs entirely outside your cluster.

The Broader Shift: From Ingress to Gateway API

AGC’s core design centers on the Gateway API, a modern Kubernetes networking standard intended to replace the traditional Ingress API. More than just an update, the Gateway API represents a fundamental shift in approach. Ingress was deliberately minimalistic, while the Gateway API is designed to be expressive. It explicitly divides responsibilities among platform teams, cluster operators, and application developers, and it makes clear what each group controls.

The concept is straightforward yet impactful:

• Developers specify HTTPRoutes that link paths to services.

• Operators set up Gateways to control traffic entry points, exposed listeners, and policies.

• Platform teams create GatewayClasses that specify the underlying implementation, such as a cloud service like AGC, a mesh component, or an in-cluster controller.
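The following minimal sketch in Python makes the role split concrete. It models the three resources as plain dictionaries, which have the same shape `kubectl` accepts as JSON. It then checks that each layer points at the one above it. The class, controller, namespace, and Service names are hypothetical. The check is a toy consistency test and does not replace the Gateway API’s own validation.

```python
"""Sketch of the Gateway API role split as plain Python dicts.

All names ("example-class", "shared-gw", "infra", "shop", ...) are
hypothetical placeholders, not values any implementation requires.
"""
from typing import List

GATEWAY_API = "gateway.networking.k8s.io/v1"


def gateway_class(name: str, controller: str) -> dict:
    # Platform team: names the implementation behind the class.
    return {"apiVersion": GATEWAY_API, "kind": "GatewayClass",
            "metadata": {"name": name},
            "spec": {"controllerName": controller}}


def gateway(name: str, namespace: str, class_name: str) -> dict:
    # Operators: define the entry point and which namespaces may attach routes.
    return {"apiVersion": GATEWAY_API, "kind": "Gateway",
            "metadata": {"name": name, "namespace": namespace},
            "spec": {"gatewayClassName": class_name,
                     "listeners": [{"name": "http", "protocol": "HTTP", "port": 80,
                                    "allowedRoutes": {"namespaces": {"from": "All"}}}]}}


def http_route(name: str, namespace: str, gw: str, gw_ns: str,
               prefix: str, service: str, port: int) -> dict:
    # Developers: map a path prefix to a backend Service.
    return {"apiVersion": GATEWAY_API, "kind": "HTTPRoute",
            "metadata": {"name": name, "namespace": namespace},
            "spec": {"parentRefs": [{"name": gw, "namespace": gw_ns}],
                     "rules": [{"matches": [{"path": {"type": "PathPrefix", "value": prefix}}],
                                "backendRefs": [{"name": service, "port": port}]}]}}


def dangling_refs(objs: List[dict]) -> List[str]:
    """Report references that point at nothing: Gateway -> GatewayClass,
    HTTPRoute -> Gateway. A toy consistency check, not a full validator."""
    classes = {o["metadata"]["name"] for o in objs if o["kind"] == "GatewayClass"}
    gateways = {(o["metadata"]["namespace"], o["metadata"]["name"])
                for o in objs if o["kind"] == "Gateway"}
    problems = []
    for o in objs:
        name = o["metadata"]["name"]
        if o["kind"] == "Gateway" and o["spec"]["gatewayClassName"] not in classes:
            problems.append(f"Gateway {name}: unknown GatewayClass")
        if o["kind"] == "HTTPRoute":
            for ref in o["spec"]["parentRefs"]:
                # An omitted parentRef namespace defaults to the route's own.
                ns = ref.get("namespace", o["metadata"]["namespace"])
                if (ns, ref["name"]) not in gateways:
                    problems.append(f"HTTPRoute {name}: unknown parent {ns}/{ref['name']}")
    return problems


objs = [
    gateway_class("example-class", "example.com/gateway-controller"),
    gateway("shared-gw", "infra", "example-class"),
    http_route("shop-route", "shop", "shared-gw", "infra", "/shop", "shop-svc", 8080),
]
print(dangling_refs(objs))  # []
```

Each function corresponds to one role. A developer can change `http_route` inputs without touching the Gateway or GatewayClass owned by other teams.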

AGC aligns closely with this model. It is Azure’s managed implementation of the Gateway API, selected through its own GatewayClass. It offers a declarative, Kubernetes-native interface to Azure networking. In practice, you specify routing and policies in YAML, while the Azure control plane handles the heavy lifting: TLS termination, scaling, and observability.
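Applied to AGC, the operator’s side of that contract is a Gateway that names AGC’s GatewayClass. The Python sketch below emits such a manifest as JSON. The class name `azure-alb-external` and the `alb.networking.azure.io/alb-name` and `alb.networking.azure.io/alb-namespace` annotations reflect our reading of the AGC documentation, so treat them as assumptions to verify. The Gateway, namespace, ALB, and Secret names are hypothetical.

```python
import json

# Assumptions, taken from our reading of the AGC quickstarts; verify against
# current Azure documentation before use:
#   * the ALB Controller registers a GatewayClass named "azure-alb-external"
#   * the two alb.networking.azure.io annotations tie this Gateway to an
#     ApplicationLoadBalancer resource managed by that controller
AGC_GATEWAY_CLASS = "azure-alb-external"


def agc_gateway(name: str, namespace: str, alb_name: str,
                alb_namespace: str, tls_secret: str) -> dict:
    """Build an HTTPS Gateway that terminates TLS at AGC."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                "alb.networking.azure.io/alb-name": alb_name,
                "alb.networking.azure.io/alb-namespace": alb_namespace,
            },
        },
        "spec": {
            "gatewayClassName": AGC_GATEWAY_CLASS,
            "listeners": [{
                "name": "https",
                "protocol": "HTTPS",
                "port": 443,
                # TLS terminates at the managed gateway; the certificate
                # lives in a Kubernetes Secret in the Gateway's namespace.
                "tls": {
                    "mode": "Terminate",
                    "certificateRefs": [{"kind": "Secret", "name": tls_secret}],
                },
            }],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, so this can be piped to `kubectl apply -f -`.
    manifest = agc_gateway("shop-gw", "shop", "alb-hub", "alb-infra", "shop-tls")
    print(json.dumps(manifest, indent=2))
```

Only the GatewayClass name and the annotations are AGC-specific. The rest of the manifest is plain Gateway API.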

By building directly on the Gateway API, AGC is not just a proprietary ingress layer but a standards-based, cloud-ready gateway. It uses the same Kubernetes APIs as other Gateway API implementations. That keeps route definitions largely portable across environments and positions AGC well as the ecosystem moves away from traditional Ingress.

How Application Gateway for Containers Fits In

Conceptually, AGC sits at a very specific intersection:

Between Azure’s Layer 7 networking fabric and Kubernetes’ declarative configuration model.

In a traditional setup, the ingress controller for your cluster resides within the Kubernetes network boundary. It monitors Ingress or Gateway resources, sets up routes, and terminates connections directly on the cluster nodes. While this is effective, it also couples your control plane and data plane. As a result, when you scale nodes, replace instances, or rotate certificates, the ingress layer is affected.

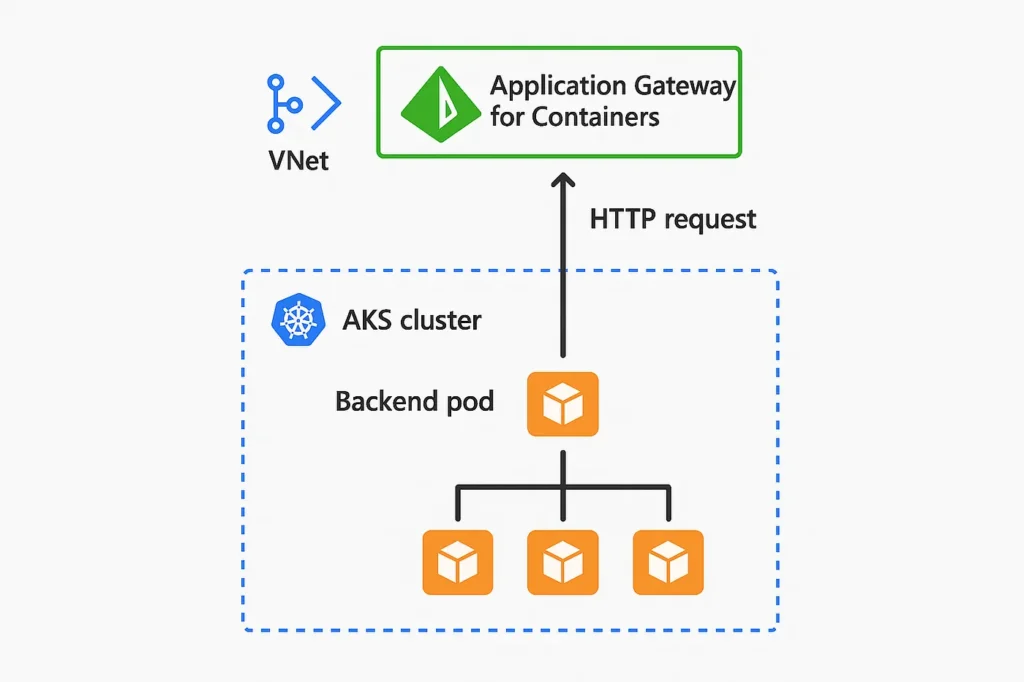

AGC separates these concerns by functioning as a managed Azure resource, not as a workload. It is aware of your clusters but does not depend on them for ensuring availability. Traffic first reaches the Application Gateway for Containers, where routing, WAF policies, and TLS termination happen, then it is securely directed to your backend pods.
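The routing step in that flow follows the Gateway API’s HTTPRoute matching rules. The sketch below is a simplified illustration of how the specification describes path precedence, not AGC’s actual implementation. It picks a backend for a request path: an Exact match beats any PathPrefix match, and among prefixes the longest one wins. It deliberately ignores header, method, and query-parameter matches, hostnames, and weighted backends. The backend names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PathRule:
    match_type: str  # "Exact" or "PathPrefix", as in a Gateway API HTTPRouteMatch
    value: str       # the path or prefix to match
    backend: str     # hypothetical backend Service name


def prefix_matches(prefix: str, path: str) -> bool:
    # PathPrefix compares whole path elements: "/api" matches "/api" and
    # "/api/v1" but not "/apix". A trailing slash on the prefix is ignored.
    p = prefix.rstrip("/")
    if p == "":
        return True  # "/" matches every path
    return path == p or path.startswith(p + "/")


def select_backend(rules: List[PathRule], path: str) -> Optional[str]:
    """Exact matches win; otherwise the longest matching prefix wins."""
    for r in rules:
        if r.match_type == "Exact" and r.value == path:
            return r.backend
    candidates = [r for r in rules
                  if r.match_type == "PathPrefix" and prefix_matches(r.value, path)]
    if not candidates:
        return None  # a gateway would typically answer 404 here
    return max(candidates, key=lambda r: len(r.value)).backend


rules = [
    PathRule("PathPrefix", "/", "web"),
    PathRule("PathPrefix", "/api", "api"),
    PathRule("Exact", "/api/health", "health"),
]
for p in ["/", "/api/orders", "/apix", "/api/health"]:
    print(p, "->", select_backend(rules, p))
```

Note that `/apix` falls through to the catch-all `/` rule. Element-wise prefix matching keeps `/api` from accidentally capturing it.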

This separation has significant implications for scalability, resilience, and security:

• Scaling your cluster no longer impacts your ingress throughput.

• Security policies, such as WAF rules or Private Link settings, are managed as Azure resources and governed with Azure Policy, rather than through Kubernetes ConfigMaps.

• Network isolation is simplified because the ingress tier sits outside your compute plane but remains within your virtual network perimeter.

It’s ingress-as-a-service, but one that still behaves like a native Kubernetes component.

Design Principles and Conceptual Architecture

At its core, Application Gateway for Containers is shaped by three architectural principles:

1. Kubernetes-Native by Design

AGC takes its configuration from Kubernetes resources. Gateways, Routes, and the backend Services they reference are defined in YAML, managed through GitOps pipelines, and validated with standard Kubernetes admission workflows. You don’t have to build or operate a separate synchronization layer, and there’s no manual setup: you define your intent, and Azure carries it out.

2. Separation of Control and Data Plane

The cluster no longer handles the ingress data path. The Application Gateway for Containers runs in Azure’s managed environment, handling routing, inspection, TLS, and WAF, while your AKS cluster concentrates only on workloads. This separation makes upgrades easier and enables independent scaling of compute and ingress components.

3. Shared, Multi-Cluster Ready Infrastructure

AGC is fundamentally designed for multi-tenancy. One gateway instance can serve multiple clusters, each within its own namespace and policy boundaries. This enables new topology options such as hub-and-spoke ingress, shared governance models, or centralized security enforcement across numerous clusters.
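Within a single cluster, these per-namespace boundaries map onto a standard Gateway API field. Each Gateway listener declares `allowedRoutes`, which decides which namespaces may attach routes to it. The Python sketch below models that decision. It is a simplification: label selectors support only `matchLabels`. The namespace names and labels are hypothetical. The sketch says nothing about how AGC associates several clusters with one instance.

```python
from typing import Dict, Mapping


def route_allowed(listener: dict, gateway_ns: str, route_ns: str,
                  ns_labels: Mapping[str, Mapping[str, str]]) -> bool:
    """Can an HTTPRoute in route_ns attach to this Gateway listener?

    Simplified: Selector supports matchLabels only (no matchExpressions).
    """
    policy = listener.get("allowedRoutes", {}).get("namespaces", {})
    source = policy.get("from", "Same")  # the Gateway API default is "Same"
    if source == "All":
        return True
    if source == "Same":
        return route_ns == gateway_ns
    if source == "Selector":
        wanted = policy.get("selector", {}).get("matchLabels", {})
        labels = ns_labels.get(route_ns, {})
        return all(labels.get(k) == v for k, v in wanted.items())
    raise ValueError(f"unsupported allowedRoutes source: {source!r}")


# Hypothetical namespaces and labels, for illustration only.
namespace_labels: Dict[str, Dict[str, str]] = {
    "payments-prod": {"env": "prod"},
    "payments-dev": {"env": "dev"},
}
prod_listener = {
    "name": "https-prod",
    "allowedRoutes": {"namespaces": {"from": "Selector",
                                     "selector": {"matchLabels": {"env": "prod"}}}},
}
print(route_allowed(prod_listener, "gateway-infra", "payments-prod", namespace_labels))  # True
print(route_allowed(prod_listener, "gateway-infra", "payments-dev", namespace_labels))   # False
```

With a label selector, the operator owns the boundary. A team cannot attach a route to the production listener simply by pointing its `parentRefs` at it.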

Together, these design principles create a pattern that resembles Azure Front Door combined with Kubernetes Gateway rather than “NGINX in disguise.” It’s a true dual citizen of both worlds: Kubernetes and Azure networking.

Why This Matters for Platform Teams

For platform teams, ingress has traditionally been a balancing act between flexibility and control. Developers desire autonomy: they want to deploy microservices that work smoothly without needing firewall change requests. Conversely, security teams seek consistent enforcement, centralized visibility, and defined ownership.

AGC serves as a link connecting those two worlds.

Since it’s declarative and aware of Kubernetes, developers can continue to define routes, annotations, and service dependencies. However, enforcement is handled by Azure’s managed ingress layer, which is governed by enterprise-grade policies. This allows for standardization of WAF rules, TLS policies, and logging configurations across the organization without reducing flexibility.

It’s an architecture that supports federated governance: local autonomy within global guardrails.

For operations teams, this greatly simplifies the work. There’s no in-cluster ingress proxy to scale, patch, or monitor, and no manual SSL certificate rotation on ingress pods. The ingress tier consists of Azure-native resources, observable via Azure Monitor and governed by Azure Policy.

And for security teams, the advantage is equally clear: ingress now operates within the same trust boundary as the rest of Azure networking. Traffic can be inspected, audited, and traced using familiar tools without losing the context of the Kubernetes workloads behind it.

Observability and Policy Integration

Observability has long been a hidden challenge in ingress management. When the ingress controller operates within the cluster, its metrics are simply additional Prometheus targets. This setup works until you need to correlate those metrics with Azure networking logs, Application Insights traces, or WAF alerts.

Since AGC is part of the Application Gateway family, it benefits from Azure’s comprehensive observability tools. Metrics like request counts, latency, and response codes are automatically integrated into Azure Monitor. Security insights, including WAF events, blocked requests, and signature updates, are also accessible through the same portal and APIs used for traditional Application Gateways.

From a design standpoint, ingress observability now seamlessly integrates with your overall Azure monitoring approach. You can trace an end-user request from the public IP to the backend pod, crossing both Azure and Kubernetes boundaries, all within the same tool.

On the governance side, AGC’s integration with Azure Policy enables administrators to maintain uniform configuration across all environments. For instance, it ensures that all gateways use HTTPS listeners, that WAF is set to prevention mode, and that deployments exposing plain HTTP endpoints are blocked. Previously, such policies were enforced via admission webhooks or custom controllers, but now they are managed at the platform level, making them consistent, auditable, and organization-wide.
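The governance rules listed here are easy to state precisely. The Python sketch below expresses one of them, “all gateways use HTTPS listeners”, as a check over Gateway manifests that could run in a CI pipeline before anything reaches the cluster. It illustrates the rule only and is not Azure Policy syntax. The manifests are hypothetical.

```python
from typing import List


def plain_http_listeners(manifests: List[dict]) -> List[str]:
    """Flag Gateway listeners that expose plain HTTP.

    A local pre-merge check mirroring the rule 'all gateways use HTTPS
    listeners'. It is not an Azure Policy definition.
    """
    findings = []
    for m in manifests:
        if m.get("kind") != "Gateway":
            continue  # only Gateways declare listeners
        meta = m.get("metadata", {})
        gw = f'{meta.get("namespace", "default")}/{meta.get("name", "?")}'
        for listener in m.get("spec", {}).get("listeners", []):
            if listener.get("protocol") == "HTTP":
                findings.append(
                    f"{gw}: listener {listener.get('name')!r} serves plain HTTP "
                    f"on port {listener.get('port')}")
    return findings


manifests = [
    {"kind": "Gateway", "metadata": {"name": "shop-gw", "namespace": "shop"},
     "spec": {"listeners": [
         {"name": "https", "protocol": "HTTPS", "port": 443},
         {"name": "http", "protocol": "HTTP", "port": 80},
     ]}},
    {"kind": "HTTPRoute", "metadata": {"name": "shop-route", "namespace": "shop"}},
]
for finding in plain_http_listeners(manifests):
    print(finding)
```

A production version would probably need an exception for a common pattern: an HTTP listener whose routes only redirect to HTTPS.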

Conceptual Alignment with Modern Platform Engineering

One of the most intriguing aspects of AGC is not its technical details but its cultural implications. It showcases the increasing overlap between platform engineering and cloud networking.

Traditionally, in Kubernetes operations, platform teams concentrate on internal cluster functions such as service meshes, observability, and developer self-service. Conversely, network and security teams manage external components such as gateways, firewalls, DDoS mitigation, and policy enforcement. These two domains often encounter challenges when their responsibilities intersect, especially during incidents.

AGC dissolves traditional boundaries by offering a network-native service that speaks Kubernetes. In practice, platform engineers declare their networking goals, while network teams keep control over enforcement. Each team works within its own expertise, Kubernetes or Azure, yet both use a unified control interface. This isn’t merely a technical upgrade; it fosters organizational harmony by turning ingress into a shared responsibility instead of a source of conflict.

Multi-Cluster, Multi-Region, and Hybrid Thinking

The future of Kubernetes involves multiple clusters rather than a single large one. As platforms mature, they tend to split into regional, isolated environments, or hybrid setups that integrate AKS with edge or on-prem workloads. Traditional ingress controllers weren’t built for this complexity, as they assumed a single cluster boundary.

AGC, in contrast, considers multi-cluster ingress as a primary feature. Since it operates within Azure’s control plane, a single Application Gateway for Containers can handle traffic for multiple clusters. Each cluster registers its namespaces and routing rules, but all traffic passes through a single, centralized managed ingress layer.

For enterprises implementing hub-and-spoke architectures, this approach is a game-changer. Instead of setting up separate ingress controllers for each environment, you can deploy one or two AGC instances within a shared network hub and link multiple AKS clusters for development, testing, and production across regions via Private Link or VNet peering. This method not only enhances operational efficiency but also reinforces governance by maintaining a single ingress policy uniformly across all environments.

Hybrid environments also gain advantages. AGC can function as the cloud-side ingress for clusters connected through Azure Arc or hybrid links, providing consistent governance for edge or on-premises Kubernetes deployments. The same Gateway API manifests are used regardless of the cluster’s location. This multi-cluster capability makes AGC more than just an ingress controller; it’s a comprehensive network service platform for large-scale Kubernetes connectivity.

Relationship to Other Azure Networking Services

While it may be tempting to view AGC as an extension of the existing Application Gateway or as a competitor to Front Door or API Management, it actually serves a different purpose. Azure Front Door primarily handles global Layer 7 routing at the edge, making it ideal for distributing traffic across regions and delivering content. API Management centers on managing developer-facing APIs, their lifecycle, and authentication. Application Gateway for Containers operates closer to the cluster boundary, where enterprise policies are applied to traffic entering internal services.

Think of it as the “inner ingress” that complements Front Door’s “outer ingress.” In contemporary Azure setups, traffic typically moves from Front Door to AGC to AKS, with each layer managing a specific role: global routing, secure ingress, and workload processing. This layered structure follows the defense-in-depth principle. Each gateway provides additional visibility and control without repeating functions.

Security as a Core Capability

Security is integral to AGC’s design. It uses Application Gateway’s WAF engine to enable Layer 7 inspection at the managed ingress level. This allows organization-wide WAF policies to be enforced on containerized applications without requiring extra proxies or filters within the cluster.

TLS termination at the gateway separates certificate management from workload lifecycles. Integration with Private Link and Virtual Network ensures traffic remains within your Azure boundary, supporting compliance with strict regulations such as NIS2 or DORA for European workloads.

From a Zero Trust approach, AGC functions as a policy enforcement point, authenticating and inspecting traffic before it reaches your pods. When combined with workload identity and network policies in AKS, it contributes to a comprehensive end-to-end security strategy.

The Developer Experience: Declarative and Transparent

One of AGC’s most elegant features is what developers don’t need to do. They aren’t required to learn new Azure-specific APIs or deploy agents. Instead, they work with standard Kubernetes resources such as Gateway, HTTPRoute, and the Services those routes reference; AGC simply interprets them. To developers, it remains Kubernetes; to operators, it’s Azure networking with built-in compliance and observability.

This transparency is essential because it supports the GitOps approach. Developers specify their desired state, store it in Git, and the platform ensures implementation with no manual work or requests to external load balancer teams.

For platform engineers, AGC completes the GitOps cycle by allowing network ingress to be expressed declaratively, versioned with application code, and managed through the cloud infrastructure.

Conceptual Example: From Policy to Flow

Imagine a scenario: Your organization manages multiple AKS clusters, one for development in West Europe, one for staging in North Europe, and one for production in Central US. Previously, each cluster had its own ingress controller, TLS certificates, and slightly different configurations handled by different teams. Managing ingress inconsistencies took as much effort as deploying workloads.

With AGC, this changes: a single managed gateway in your hub VNet provides listeners for each environment. Each AKS cluster only defines its Gateway and Route resources, covering its namespace and services. Azure enforces organization-wide WAF policies, unified logging, and consistent TLS settings.
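In Gateway API terms, one way to express “listeners for each environment” is a single Gateway with one HTTPS listener per environment hostname. Each listener admits routes only from namespaces labeled for that environment. The Python sketch below generates those listeners. The hostnames, the `env` label, and the Secret name are hypothetical. The sketch does not model how clusters in different regions attach to the hub gateway.

```python
from typing import Dict, List

# Hypothetical environment-to-hostname mapping for the hub gateway.
ENVIRONMENTS: Dict[str, str] = {
    "dev": "dev.example.com",
    "staging": "staging.example.com",
    "prod": "www.example.com",
}


def hub_listeners(envs: Dict[str, str], tls_secret: str) -> List[dict]:
    """One HTTPS listener per environment on a shared Gateway.

    Each listener accepts routes only from namespaces labeled env=<name>,
    so teams attach routes to their own environment's entry point.
    """
    listeners = []
    for env, host in sorted(envs.items()):
        listeners.append({
            "name": f"https-{env}",
            "hostname": host,
            "protocol": "HTTPS",
            "port": 443,
            # Same TLS settings everywhere; here one (hypothetical) wildcard cert.
            "tls": {"mode": "Terminate",
                    "certificateRefs": [{"kind": "Secret", "name": tls_secret}]},
            "allowedRoutes": {"namespaces": {
                "from": "Selector",
                "selector": {"matchLabels": {"env": env}},
            }},
        })
    return listeners


for listener in hub_listeners(ENVIRONMENTS, "wildcard-example-com-tls"):
    print(listener["name"], listener["hostname"])
```

Generating listeners from one mapping is what keeps TLS settings identical across environments. Adding an environment means adding one entry rather than hand-editing another listener.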

Development teams deploy YAML, while the platform ensures compliance. This results in a centrally managed ingress service that is locally consumed, a principle that made managed Kubernetes successful, now extended to Layer 7 networking.

Operational Benefits in Perspective

Operationally, AGC’s influence is subtle yet significantly impactful. It elevates ingress from a cluster-level issue to a platform-wide capability, leading to three key outcomes:

- Easier lifecycle management, with no in-cluster ingress proxy to upgrade or patch.

- Lower resource use, as ingress traffic no longer competes with the cluster for CPU or memory.

- A predictable cost model, where you pay for a managed service instead of absorbing unpredictable in-cluster overhead.

It also simplifies incident response. When traffic anomalies occur, you can start the investigation in Azure Monitor, correlate WAF logs, and trace requests back to backend pods. Troubleshooting ingress becomes a straightforward process rather than a multi-tool puzzle.

This isn’t about replacing every controller; it’s about understanding when ingress maturity requires a managed, policy-based approach.

The Future of Cloud-Native Ingress

Looking ahead, Application Gateway for Containers (AGC) is more than just a new Azure service; it signifies a broader shift in cloud-native architecture. The trend is clear: shift operational complexity from the cluster to the managed layer, while keeping configurations declarative and portable.

Similar to how Azure Container Storage handles storage management and Azure Monitor manages observability pipelines, AGC manages the network edge. This reflects the same principle: enabling engineers to focus on intent rather than infrastructure.

As Kubernetes adopts the Gateway API as a standard, this approach is expected to become the default across cloud providers. Azure’s early focus on AGC gives it an advantage, blending open standards with platform stability.

Ultimately, AGC might serve as a unifying element connecting AKS, Container Apps, and Arc-enabled clusters, providing a consistent ingress experience across all Azure platforms.

Closing Reflection

Ingress has long been the quiet backbone of the Kubernetes ecosystem: invisible when it works, glaringly obvious when it doesn’t. With Application Gateway for Containers, Azure redefines ingress as a strategic platform service rather than just an implementation detail.

It is Kubernetes-native, managed by Azure, declarative, policy-driven, flexible, and secure. This approach symbolizes the natural merging of networking, operations, and platform engineering, serving as a link between developer independence and enterprise oversight.

In many respects, AGC is more than just ingress. It reflects the future of how workloads are connected, secured, and monitored in a cloud-native environment, a world where developers specify intent and the platform enforces it.
